Calit2's Video Processing Lab: Bringing 3D to the Operating Room

San Diego, Calif., Jan. 27, 2011 — For all of their high-tech advantages, laparoscopic surgical systems are only capable of providing a two-dimensional visualization — or in other words, no depth perception. “This means that often surgeons can’t pinpoint the exact location of an organ until they brush up against it with their tools,” explains Ramsin Khoshabeh, a Ph.D. candidate in Electrical and Computer Engineering at the University of California, San Diego. “I hate to put it this way, but some surgical procedures are still done by brute force.”

Khoshabeh and his colleagues in the Video Processing Laboratory (VPL) at the UC San Diego division of the California Institute for Telecommunications and Information Technology (Calit2) are working to bring three-dimensional video feeds into the operating room by using autostereoscopic displays. Their hope is that one day surgeons will not only be able to perform minimally invasive surgeries in 3D, but will also be able to do so without having to wear potentially cumbersome 3D glasses.

“Surgeons are often slow to adopt new technologies,” says Khoshabeh. “They worry that a new technology will be dangerous, that it will interfere with the procedure. They say, ‘I’ve been doing this the same way for 40 years; why change things?’

“To accommodate all these types of mentalities, we are pitching 3D laparoscopic surgery as a supplement. It’s a way for surgeons to enhance their abilities, and it’s also a way for interns and everyone else in the room to learn from what they are doing by watching the video feed.”

UCSD Electrical Engineering Professor Truong Nguyen, an expert in digital signal processing, is the head of the VPL. Other collaborators include world-renowned UCSD surgeons Dr. Mark Talamini (chairman of the UCSD Department of Surgery) and Dr. Santiago Horgan (director of Minimally Invasive Surgery), as well as Dr. Horgan’s researcher, Dr. Noam Belkind. The work being done at the VPL — with collaboration from visiting scholars from all around the world — is at the forefront of research in 3D processing.

Researchers in the fourth- and fifth-floor lab space at Calit2’s Atkinson Hall focus their efforts on all aspects of 2D and 3D image and video processing, including 3D perceptual measures, image stabilization, frame-rate up-conversion, saliency detection (the automatic tagging of regions in an image that would likely draw the attention of a human observer), motion estimation and compensation, coding, and object detection, extraction and tracking.

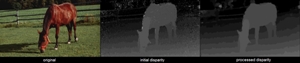

Because 3D stereo consists of two images (one for the left-eye view and one for the right-eye view), both images must be properly aligned for the viewer to perceive the 3D effect accurately. Many 3D applications also need to synthesize virtual views from the two existing images so that viewers can see the scene from another perspective. When these virtual views are generated, however, regions of the scene that were previously hidden (perhaps occluded by an object in the foreground) become uncovered, which means that ‘holes’ appear in the virtual images. Khoshabeh and his colleagues in the VPL are looking at ways to fill these holes by extrapolating neighboring information from, say, the left-eye view to fill a hole in the right-eye view, and at ways to improve the method for matching points in one view to the corresponding points in the other (a process known as disparity estimation).

Notes Khoshabeh: “For humans, this is easy, but for computers this is a non-trivial task. And as you can imagine, this is very important in terms of surgery. If you have something that looks strange in a 3D laparoscopic feed, a surgeon might think it’s a tumor, but really, it’s just a poorly synthesized hole.”

“As the technology evolves, not only will the holes go away, surgeons will be able to see a real live 3D effect, where they can look behind something. But right now, that’s the tricky part — capturing what’s behind and providing surgeons with accurate visualization.”
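
To make the view-synthesis, hole-filling and disparity-estimation steps described above more concrete, here is a minimal Python sketch on a single scanline. It is not the VPL’s algorithm: it assumes rectified views and integer disparities, uses plain block matching with a sum-of-absolute-differences (SAD) cost, and fills holes with a simple heuristic that copies the neighboring background pixel. The function names (`estimate_disparity`, `synthesize_view`, `fill_holes`) are hypothetical.

```python
# Illustrative 1-D sketch (one rectified scanline, integer disparities).
# Assumed convention: a pixel at x in the left view appears at x - d
# in the right view, where d is its disparity.

HOLE = None  # marker for target pixels that no source pixel maps to


def estimate_disparity(left, right, max_disp, radius=1):
    """Block matching: for each left pixel, choose the d in [0, max_disp]
    minimizing the SAD between a window around left[x] and a window
    around right[x - d]."""
    n = len(left)
    disp = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost = 0
            for k in range(-radius, radius + 1):
                xl = min(max(x + k, 0), n - 1)  # clamp at the borders
                xr = min(max(x + k - d, 0), n - 1)
                cost += abs(left[xl] - right[xr])
            if cost < best_cost:  # ties keep the smaller d
                best_d, best_cost = d, cost
        disp.append(best_d)
    return disp


def synthesize_view(left, disp, alpha):
    """Forward-warp the left scanline by alpha * disparity
    (alpha = 0 reproduces the left view, alpha = 1 approximates the right).
    If two pixels land on the same target, the one with the larger
    disparity (closer to the camera) wins. Unwritten targets stay HOLE."""
    n = len(left)
    view = [HOLE] * n
    view_disp = [-1] * n  # disparity of the pixel written at each target
    for x in range(n):
        t = x - round(alpha * disp[x])
        if 0 <= t < n and disp[x] > view_disp[t]:
            view[t] = left[x]
            view_disp[t] = disp[x]
    return view, view_disp


def fill_holes(view, view_disp):
    """Fill each hole from the nearest valid neighbor on the left or right,
    preferring the one with the smaller disparity (the background), since
    newly uncovered regions usually belong to the background."""
    n = len(view)
    filled = list(view)
    for x in range(n):
        if view[x] is not HOLE:
            continue
        lft = x - 1
        while lft >= 0 and view[lft] is HOLE:
            lft -= 1
        rgt = x + 1
        while rgt < n and view[rgt] is HOLE:
            rgt += 1
        candidates = [i for i in (lft, rgt) if 0 <= i < n]
        if candidates:
            src = min(candidates, key=lambda i: view_disp[i])
            filled[x] = view[src]
    return filled


if __name__ == "__main__":
    # A foreground object (value 50, disparity 2) in front of a flat background.
    left = [10, 10, 10, 10, 50, 50, 50, 10, 10, 10]
    disp = [0, 0, 0, 0, 2, 2, 2, 0, 0, 0]
    view, view_disp = synthesize_view(left, disp, alpha=1.0)
    print(view)  # [10, 10, 50, 50, 50, None, None, 10, 10, 10]
    print(fill_holes(view, view_disp))  # [10, 10, 50, 50, 50, 10, 10, 10, 10, 10]
```

In this toy case the warped view has two holes where the object used to be, and the background-preferring fill closes them. Real systems work on 2D images with sub-pixel disparities and much more careful inpainting; a badly filled hole is exactly the kind of artifact Khoshabeh warns a surgeon could mistake for something real.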

Although extrapolating from one view to capture another can make a true 3D effect harder to achieve, Bal notes that it is an effective technique for maximizing transmission efficiency, and that matters when viewing and potentially sharing such large files.

“Having two views means you’re dealing with twice the amount of data,” he explains. “It’s really dumb to send all that information twice when there’s so much overlap, so there are some intelligent things we can do to reduce the file size and bit rate.”
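
The redundancy Bal describes can be illustrated with disparity-compensated prediction: send the left view in full, then describe the right view as a per-block shift into the left view plus a residual. The Python sketch below is a simplified assumption on one scanline, not the lab’s coder; the function names, block size and search range are all hypothetical.

```python
# Illustrative sketch of disparity-compensated prediction on one scanline.
# The left view is sent as-is; each block of the right view is sent as one
# shift d plus a residual. Assumes both views have the same length and that
# right[x] is predicted by left[x + d].


def encode_right(left, right, block=4, max_disp=3):
    """Return a list of (d, residual) pairs, one per block of the right view."""
    n = len(right)
    encoded = []
    for start in range(0, n, block):
        idx = range(start, min(start + block, n))
        best = None
        for d in range(max_disp + 1):
            pred = [left[min(x + d, n - 1)] for x in idx]  # clamp at the edge
            resid = [right[x] - p for x, p in zip(idx, pred)]
            cost = sum(abs(r) for r in resid)
            if best is None or cost < best[0]:  # ties keep the smaller d
                best = (cost, d, resid)
        encoded.append((best[1], best[2]))
    return encoded


def decode_right(left, encoded, block=4):
    """Rebuild the right view from the left view plus the (d, residual) pairs."""
    n = len(left)
    right = []
    for b, (d, resid) in enumerate(encoded):
        for i, r in enumerate(resid):
            x = b * block + i
            right.append(left[min(x + d, n - 1)] + r)
    return right


if __name__ == "__main__":
    left = [1, 5, 2, 8, 3, 9, 4, 7, 6, 0, 3, 3]
    right = left[2:] + [0, 0]  # the same scene seen 2 pixels to the side
    enc = encode_right(left, right)
    print(enc)  # [(2, [0, 0, 0, 0]), (2, [0, 0, 0, 0]), (2, [0, 0, -3, -3])]
    print(decode_right(left, enc) == right)  # True
```

Where the two views overlap, almost every residual value is zero, which is what makes the second view cheap to code. A real codec would also transform, quantize and entropy-code the residual; this sketch keeps it exact, so decoding is lossless.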

Parallels exist with current 2D video technologies such as H.264 encoding, which uses motion estimation to exploit the correlation between sequential video frames. Something similar can be done with 3D video, except that it has two kinds of correlation: angular correlation (between the left and right views) and temporal correlation (between, say, frame ‘t’ and frame ‘t+1’). Khoshabeh says that fusing these two cues will be crucial to achieving high 3D coding efficiency in the compression and transmission of high-resolution 3D data.
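
Fusing the two cues can be sketched as a per-block choice between two references: the same view at the previous frame (the temporal cue that H.264 motion estimation already uses) and the other view at the same instant (the angular cue). The multiview extension of H.264 (MVC) likewise adds inter-view prediction alongside temporal prediction, but the Python code below is only a hedged illustration on 1-D data, not MVC’s or the VPL’s method; `sad`, `best_prediction` and the search range are hypothetical.

```python
# Illustrative sketch: predict a block of the right view at frame t+1 from
# either the right view at frame t (temporal cue) or the left view at
# frame t+1 (angular / inter-view cue), keeping whichever matches better.


def sad(a, b):
    """Sum of absolute differences between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def best_prediction(cur, start, references, search=2):
    """cur: block of the current frame that begins at index `start`.
    references: dict mapping a label to a reference scanline.
    Tries every shift in [-search, search] in every reference and returns
    (label, shift, cost) for the lowest SAD; ties keep the earlier candidate."""
    best = None
    for label, ref in references.items():
        for s in range(-search, search + 1):
            lo = start + s
            if lo < 0 or lo + len(cur) > len(ref):
                continue  # candidate window would fall outside the reference
            cost = sad(cur, ref[lo:lo + len(cur)])
            if best is None or cost < best[2]:
                best = (label, s, cost)
    return best


if __name__ == "__main__":
    refs = {
        "temporal": [0, 1, 2, 3, 4, 5, 6, 7],    # right view, frame t
        "inter-view": [0, 0, 0, 7, 1, 7, 0, 0],  # left view, frame t+1
    }
    # A block that simply continues from the previous frame.
    print(best_prediction([3, 4, 5], 3, refs))  # ('temporal', 0, 0)
    # A block with no good temporal match that is visible in the other view.
    print(best_prediction([7, 1, 7], 2, refs))  # ('inter-view', 1, 0)
```

The first block is best predicted from the previous frame, while the second is found in the other view. A practical encoder would also weigh the bits needed to signal each choice, not just the SAD.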

The researchers hope that one day their algorithms will be standardized by the Moving Picture Experts Group (MPEG), a working group formed to set standards for audio and video compression and transmission. Tran’s algorithm for virtual view synthesis is already the top performer in the field.

“But,” Khoshabeh says, “there’s still a lot of room for innovation.”

“The reason I got involved with this research is because I wanted to take general engineering practices and apply them to medicine,” he adds, noting that he intends to apply to UCSD’s Medical Scientist Training Program. “Even though we’ve had so many breakthroughs, surgeons are still sewing people up just like they have for hundreds of years.

“The problem right now is that the engineers don’t know what the doctors need, and the doctors don’t know what the engineers are capable of doing. I want to bridge that gap. This work in 3D laparoscopy is only the beginning.”

Related Links

UCSD Video Processing Laboratory

Media Contacts

Tiffany Fox, tfox@ucsd.edu, (858) 246-0353