Encounters with 'The Thing That Should Not Be'

San Diego, Calif., July 21, 2011 -- It is not often that a scientific paper will cite both a Metallica song and an H.P. Lovecraft story, but they each expertly capture the essence of a hard-to-articulate phenomenon scientifically measured for the first time by an international team of researchers led by Ayse Saygin of the University of California at San Diego.

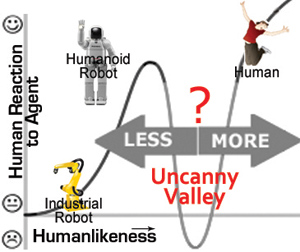

In genre-defining works, both the pioneering metal band and the horror novelist refer to ‘The Thing That Should Not Be’, a creature whose existence is perceived as unnatural and unnerving. In the 1970s, legendary roboticist Masahiro Mori coined the term 'uncanny valley' (see sidebar) to describe the uncomfortable feeling of encountering an android that, by most accounts, looks and acts human, but does not quite hit the mark.

“It is often easier to have a robot accepted by the general public if it does not look so human-like,” says Saygin, a researcher affiliated with the UC San Diego division of the California Institute for Telecommunications and Information Technology (Calit2).

Roboticists and animators are keenly aware that the uncanny valley can spell certain doom for their androids or animated movie characters. However, it is often difficult to determine whether an android or animation will fall into the uncanny valley.

Saygin, an assistant professor of cognitive science and neuroscience at UCSD, and her collaborators used functional MRI (fMRI) scans of human subjects’ brain activity to make the first objective measurements of the uncanny valley effect.

“It is difficult to measure the uncanny valley. People can’t even agree on how to frame the question about what it is,” notes Saygin. “We were hoping to find a signature in either the brain or the body for the discomfort people feel.”

Designers could test for such a neural or physiological signature when developing a new robot, which is often a multimillion-dollar endeavor.

Her research, funded in part by a grant from Calit2’s Strategic Research Opportunities program, was completed with colleagues in the UK, France, Denmark and Japan, and published in the Oxford University Press journal Social Cognitive and Affective Neuroscience.

The team identified a number of brain areas in the parietal cortex, known to be involved in processing body movements, that became significantly active when viewing videotaped movements of a robot that looks human, but moves like a robot.

“We think the brain expends more energy when encountering incongruent information, and this increased activity can be thought of as an ‘error signal,’” says Saygin.

Saygin explains that in terms of error signal, there is nothing special about parietal cortex per se. “If we presented conflicting auditory information, say a human with a robot voice or vice versa, we might see the same type of error signal in a network that includes auditory cortex.”

“We therefore think there is no central brain area that generates an error signal when processing conflicting information, but rather each network in the brain produces its own relevant error signal.”

Awards, follow-up studies, and new faces

Saygin originally began studying the uncanny valley theory in 2005 as a graduate student at UCSD. She was awarded a grant from the Kavli Institute for Brain and Mind to capture video of a robot in Japan (called Repliee Q2) and the human it was modeled after. This video was ultimately used in the fMRI study.

This June, Saygin received a Hellman fellowship, which is awarded to junior faculty members as they work towards securing tenure at UCSD. Saygin will use the $35,000 fellowship to acquire electroencephalography (EEG) equipment to perform follow-up studies of the uncanny valley phenomenon.

EEG has the advantage over fMRI of measuring brain activity with very high temporal precision. fMRI signals, by contrast, smear the record of the brain’s activity over several seconds, making it hard to say exactly when an area became active and, therefore, what exactly spurred the activity.

“EEG is a lot cheaper and more portable than an fMRI setup, making it accessible to more researchers and even people in the robotics industry,” adds Saygin.

“Also, in the fMRI study, our subjects were lying still on their backs watching a video. With EEG, more interactive studies are possible where subjects experience the ‘presence’ of the agent, be it human or android.”

To enhance that feeling of ‘presence’, Saygin will collaborate with researchers at UCSD’s Institute for Neural Computation (INC) to use EEG in INC’s virtual reality environments. Heading the EEG project under Saygin’s supervision is graduate student Burcu Urgen, who is a Calit2 research fellow. Urgen notes that previous work describes a unique signal in the EEG waveform indicative of a violation of one’s expectations that may further help pinpoint the brain’s processing of the uncanny valley.

Chan’s subjects will watch video of robot, android, and human gestures towards a visual target (by pointing and/or looking at it), and she will determine if the motion perception areas of the brain process the gestures differently according to which agent made the gesture.

Over the summer, two local high school students, Andrew Hostler and Jennifer Starkey, will work in Saygin’s lab on a survey study about the uncanny valley. They plan to enlist Amazon.com’s Mechanical Turk, a crowd-sourcing tool that co-ordinates the use of human intelligence in the performance of simple tasks. The goal will be to collect data from a large set of Internet users around the globe to determine how general the uncanny valley effect is, that is, whether a similar experience occurs when viewing a variety of incongruent images and videos.

This is the second summer that Saygin’s lab has participated in the Research Experience for High School Students (REHS), a volunteer internship program administered by the San Diego Supercomputer Center.

This past spring also brought other awards for Saygin and her lab. In May, Saygin won a Faculty Career Development Award, another source of support for junior faculty seeking tenure.

In June, Arthur Vigil was awarded a Chancellor’s undergraduate research fellowship to work on a character animation project in the Saygin lab. Also in June, Saygin and Cognitive Science graduate student Luke Miller were awarded another $35,000 grant from the Kavli Institute for Brain and Mind to fund an fMRI study of the brain areas involved in monitoring one’s own or others’ use of tools.

Related Links

UCSD Cognitive Neuroscience and Neuropsychology Lab

Media Contacts

by Chris Palmer, 858-534-4763, or crpalmer@mail.ucsd.edu