UC San Diego Researchers Demonstrate Automotive SafeShield with Qualcomm

San Diego, April 18, 2016 — For the second time in three years, researchers from the Laboratory for Intelligent and Safe Automobiles (LISA) at the University of California San Diego were invited to showcase their computer vision-based technologies in connection with the Consumer Electronics Show (CES). In 2014, German automaker Audi followed up its presence at CES with a demonstration on the streets of San Francisco with a model equipped with some of UC San Diego’s safety applications for city driving. Then earlier this year, the LISA team was at CES itself in Las Vegas, giving attendees a sneak peek of its latest ‘intelligent transportation’ features as part of Qualcomm’s expansive new automotive pavilion at the show.

Qualcomm’s exhibit included a late-model Maserati Quattroporte outfitted with next-generation infotainment and driver-assistance safety features in collaboration with the UC San Diego lab and private technology companies.

“We’ve been working with Qualcomm over the past year, but it wasn’t until late October 2015 that the company broached the possibility of showing our driver-assistance systems two months later at CES,” said LISA Director Mohan Trivedi, a professor of electrical and computer engineering in UC San Diego’s Jacobs School of Engineering. “The biggest challenge was that we would have to redesign our solutions to make them work on the Qualcomm Snapdragon processor, whereas our existing algorithms run on computers installed in the trunk of our test vehicles, because they required so much processing power.”

LISA’s presence at CES underscored the extent to which advanced automotive technologies are rapidly changing what excites consumers. For the past decade, professor Trivedi’s lab has worked on projects for Mercedes, Nissan, Volkswagen, Toyota and Audi, and the UC San Diego lab has become a must-see stop on the itinerary of auto research executives keen to understand how computer vision will reshape driver-assistance systems in the future. Indeed, even non-automakers are now forging research partnerships with Trivedi’s group: Qualcomm is one, and a relationship with Fujitsu is just beginning.

To meet the challenge Qualcomm presented to LISA researchers ahead of CES, professor Trivedi, postdoctoral researcher Ravi Satzoda, and M.S. students Frankie Lu and Sean Lee had to come up with new computer-vision algorithms to run on the newly announced 2.4 GHz Snapdragon 820A processor, which is powerful enough to support real-time object recognition and computer vision for driving assistance. Since safety is at stake in many driver-assistance systems, the algorithms had to run in real time (a minimum of 10 to 15 frames per second for automotive applications) in order to give the driver a timely warning of an impending collision.
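To put the frame-rate requirement in concrete terms, the short Python sketch below converts a frame rate into the processing time available per frame and the distance a car covers between frames. It is illustrative arithmetic only: the function names and the 108 km/h example speed are assumptions, not figures from the LISA team.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def metres_per_frame(speed_kmh: float, fps: float) -> float:
    """Distance travelled between two consecutive frames at a given speed."""
    speed_mps = speed_kmh / 3.6  # convert km/h to m/s
    return speed_mps / fps


if __name__ == "__main__":
    # Compare 1 fps with the 10-15 fps range quoted for automotive use.
    # The 108 km/h highway speed is an assumed example value.
    for fps in (1, 10, 15):
        print(
            f"{fps:>2} fps: {frame_budget_ms(fps):7.1f} ms per frame, "
            f"{metres_per_frame(108, fps):5.2f} m travelled per frame"
        )
```

At one frame per second a car at highway speed covers tens of meters between updates, which is why a warning system needs the 10 to 15 frames per second range.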

In the end, the effort was successful. It allowed Qualcomm to demonstrate the “UCSD SafeShield” technology on the Maserati model to visitors at CES in January. “Our lab’s effort successfully went from a funded effort into an actual CES demo that showed a novel way of looking at the technology to solve a problem,” noted Trivedi, a longtime faculty participant in Calit2’s Qualcomm Institute at UC San Diego. “We were very fortunate to team up with an excellent team of Qualcomm engineers who provided us with the latest hardware and mentoring. Our students learn a lot by working on real-world projects, and in this case special thanks go to Jacobs School alumnus Dr. Mainak Biswas [Ph.D. ’05] for efficiently coordinating our interactions and his enthusiastic support.”

The Maserati concept car included some technologies that are already available commercially, including LIDAR laser scanners for automatic braking to prevent front-end collisions. Ultrasonic and optical sensors monitor the driver’s blind spots to enable safer turns or lane changes. For its part, the UC San Diego team focused on computer vision, for good reason. “Computer vision makes sense because camera-based systems are cheaper than other sensors and more reliable in safety-critical situations,” said electrical and computer engineering postdoc Satzoda. “We know that these processors will be powerful enough to merge camera views across 360 degrees around the vehicle.”

Job #1 was to decide which applications were most likely to work on the embedded platform. “We wanted to show that we could have a certain functionality, such as lane-keeping assist, maybe full-surround shield, maybe things related to parking assistance, as a proof of concept for a future when we would have a car with all sorts of driver assistance powered only by Snapdragons,” explained Trivedi.

“We had to come up with algorithms that would run extremely efficiently on those Snapdragon processors,” added Satzoda. “These applications are active-safety systems, so they have to be real time.”

The team experimented with novel algorithms after coming up short with standard ones. “We were getting barely one frame per second, which is far from real time,” said grad student Lu, who expects to graduate with his M.S. degree this June. “But after devising new algorithms, we were able to achieve real-time 10-15 frames per second and an accuracy on the Snapdragon between 96 and 98 percent, which was as good as running on a separate PC for detection accuracy.”

The graduate students first worked on lane detection, “because traditional methods of lane detection weren’t very fast,” added Lu. “Most of the traditional methods were designed to run on desktops, and we had to get them working in real time on the Snapdragon. In the end, we were able to achieve 20-25 frames per second so there was more room to experiment with other applications for the CES demo.”

Realizing for the first time that the Snapdragon would be able to do more than just lane detection, they began working on algorithms for vehicle detection. They succeeded by using so-called ‘context-aware processing’: focusing on the cars nearest to the main vehicle rather than trying to detect every vehicle visible on the road.

“We didn’t want to over-stretch our resources,” noted fellow grad student Sean Lee. “That gave us a lot of confidence that we will be able to demonstrate that future Snapdragon boards will eventually allow us to detect traffic lights and signals, and also to detect pedestrians at intersections, an important safety consideration for city driving.”

In most current automobiles with built-in cameras, the latest commercial applications (such as emergency autonomous braking) are based on the feed from a single forward-looking camera. What the LISA team demonstrated at CES involved two built-in cameras, one looking forward, the other to the rear. “We focused primarily on the lane in which the car was traveling and the lanes to its left and right, where other cars might pose the most imminent hazard,” said Satzoda.

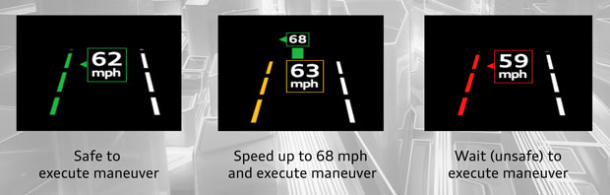

“We look at all three lanes around the vehicle, but with just two cameras, you cannot get full 360-degree coverage, so you have to fill in the blanks,” Trivedi said. “So our dynamic SafeShield technology constantly monitors the free space around the vehicle.” It does so by detecting cars and their velocity with the rear camera to predict when they might be in the blind spots on either side. The SafeShield alerts the driver if a car comes too close in the three lanes surrounding the driver’s vehicle.

“We developed motion-tracking algorithms and vehicle tracking, calculating the velocities of individual cars, after which the tracker takes over,” explained Satzoda. “It extrapolates how cars will progress through the unseen spots.” The system then alerts the driver via the dashboard display, where flashing red in any area near the car would indicate another vehicle is traveling too closely.
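The extrapolation step described above can be illustrated with a minimal Python sketch. It is not the LISA implementation: it assumes a simple constant-velocity model, and the `Track` fields, the blind-spot zone limits, and the function names are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Last rear-camera observation of a tracked vehicle (hypothetical fields)."""
    lane: int             # -1 = left lane, 0 = own lane, +1 = right lane
    gap_m: float          # longitudinal position relative to our car; negative = behind
    rel_speed_mps: float  # relative speed; positive = catching up with our car


def extrapolate(track: Track, dt_s: float) -> float:
    """Predict the longitudinal position dt_s seconds after the last observation,
    assuming the relative speed stays constant once the car leaves the camera view."""
    return track.gap_m + track.rel_speed_mps * dt_s


def in_blind_spot(track: Track, dt_s: float, zone=(-5.0, 1.0)) -> bool:
    """True if the predicted position lies inside the assumed blind-spot zone
    (in meters, relative to our car) of an adjacent lane."""
    if track.lane == 0:
        return False  # a car directly behind is not in a side blind spot
    position = extrapolate(track, dt_s)
    return zone[0] <= position <= zone[1]


if __name__ == "__main__":
    # A car 12 m behind in the left lane, closing at 4 m/s (assumed values).
    car = Track(lane=-1, gap_m=-12.0, rel_speed_mps=4.0)
    for t in (0.0, 1.0, 2.0, 3.0):
        print(f"t={t:.0f}s  position={extrapolate(car, t):+.1f} m  "
              f"blind spot: {in_blind_spot(car, t)}")
```

A production tracker would likely use a filter that also models acceleration and measurement noise; the constant-velocity assumption is used here only to keep the idea visible.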

The Maserati was part of Qualcomm’s indoor pavilion at CES, so live road tests were out of the question. Instead, the company deployed two display monitors, one mounted on the front windshield, the other in back. On each monitor they displayed a view of the road recorded during actual test drives, and for CES attendees they ran the SafeShield algorithms on Snapdragon to analyze road conditions as if in real time. “It allowed us to demonstrate how the technology works on different conditions, for instance driving on the highway versus on the street, and driving in the evening or in daylight,” said Trivedi.

The algorithms worked, paving the way for much more robust applications. “We can now add more cameras and more Snapdragons,” said Trivedi. “With minor modifications in manufacturing, more powerful Snapdragons can support this entirely new automotive application.”

“Adding two more cameras allows us to see cars in a blind spot,” said Lee. “We are also thinking about more practical applications, such as driver profile analysis, yielding a report on how risky or how improved the driver is. Parents would be able to know how well their teens are driving.”

The LISA team is already testing new algorithms on a Toyota Avalon that has six lasers for full surround, the same number of radars, plenty of cameras, and three computers in the back with their own power supply.

Those computers taking up space in the trunk of a Toyota are just temporary: LISA researchers believe that even very intensive driver-assistance algorithms will one day run on the relatively tiny Snapdragon processors. “This opens up these kinds of computing platforms to machine vision and machine learning-based algorithms,” noted Trivedi. “We’ve shown the promise, and we’d like to add other functionalities that might require more than two cameras.”

The main technology showcased by UC San Diego at CES remains the intellectual property of the university. Trivedi filed a patent application for the SafeShield approach before CES.

Both of the grad students working on the project are hoping to get jobs in a related industry when they complete their dual B.S./M.S. programs this June. Sean Lee wants to work in intelligent vehicles or computer-related robotics, while Frankie Lu is more focused on machine learning and image processing, whether for the automotive sector or another industry.

Meanwhile, professor Trivedi hopes to develop an even closer relationship with San Diego-based Qualcomm now that automotive represents a major growth area for the wireless-communications company. “I think we’ll remain engaged with Qualcomm for a long time,” said Trivedi. “Our experience with SafeShield allows us to explore other active safety-related projects with the company given the natural synergy between our expertise in intelligent systems and theirs with novel communication and computing processing. Qualcomm is a leading designer of really powerful embedded processors and a source of talented R&D professionals, so it’s great to have a partner like Qualcomm in our backyard.”

“Visitors at CES, including other automakers, expressed significant interest in the demonstration of UCSD SafeShield and the whole concept of using lane and vehicle information to generate a threat-analysis matrix that can be useful for driver safety,” said postdoc Ravi Satzoda. “We were happy to showcase our research in the Qualcomm pavilion, and in future, we hope to collaborate on developing platforms for production systems showcasing these technologies.”

Professor Trivedi expects to work further with Audi, but Audi is not the only LISA collaborator keen to pursue automotive ‘smart’ safety features. LISA is getting ready to launch a major new partnership with Toyota and its Collaborative Safety Research Center for development of a one-of-a-kind system. Stay tuned…

Related Links

Laboratory for Intelligent and Safe Automobiles

Qualcomm

Motor Trend Article (December 2013)

New York Times Article (January 2011)

IEEE Spark: Meet Mohan Trivedi

Qualcomm Institute

Media Contacts

Doug Ramsey, 858-246-0353, dramsey@ucsd.edu