Larry Smarr Helps NCSA Celebrate 30th Anniversary

September 22, 2016 — The following article is reprinted from the Sept. 20 edition of HPCwire, with permission from HPCwire. The article was written by John Russell, Editor of HPCwire. (To read the original article on HPCwire, click here.)

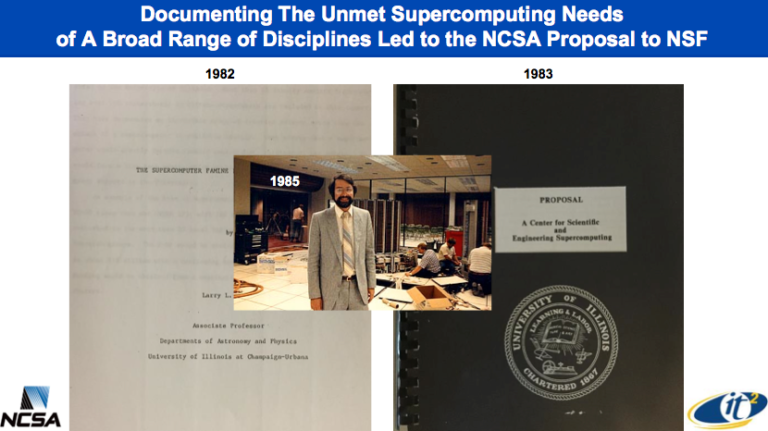

Throughout the past year, the National Center for Supercomputing Applications (NCSA) has been celebrating its 30th anniversary. On Friday, Calit2 director Larry Smarr, whose unsolicited 1983 proposal to the National Science Foundation (NSF) begat NCSA in 1985 and helped spur NSF to create not one but five national centers for supercomputing, gave a celebratory talk at NCSA. In typical fashion, Smarr not only revisited NCSA’s storied past, spreading credit liberally among collaborators, but also looked into scientific supercomputing’s future, saying, “This part is on me.”

Many of his themes were familiar, but a couple veered off the beaten path. “The human stool” (yes, that stool), said Smarr, “is the most information-rich material you have ever laid eyes on.” Its enormous data requirements will “dwarf a lot of our physics and astronomy as we really get to precision medicine and that means we are going to need a lot more computer time.” More on this later, replete with metrics and why deciphering the microbiome will require supercomputing.

Here are a few of the topics Smarr sailed through:

- NSF Uniqueness

- Big Data and the Rise of Neuromorphic Computing

- Scientific Visualization

- Exascale with and without Exotics

- Why the Microbiome is Important

- Artificial Intelligence is Coming. Soon.

NCSA, based at the University of Illinois, Urbana-Champaign (UIUC), is a U.S. supercomputing treasure. Its current flagship, Blue Waters from Cray, is roughly fifty million times faster than the original Cray X-MP machine that Smarr and his team installed at NCSA’s ambitious start. Even the floor housing the first Cray required $2M in renovations, kicked in by UI. It was a big undertaking, to say the least. Since then, Blue Waters and its predecessors have handled a wide variety of academic and government research, broken new ground in scientific visualization, and promoted industrial collaboration.

Smarr, of course, was NCSA’s first director. Today, he is director of the California Institute for Telecommunications and Information Technology (Calit2), a UC San Diego/UC Irvine partnership. An astrophysicist by training, his work spans many disciplines and is currently focused on the microbiome; the common thread is his drive to use supercomputing to solve important scientific problems. (Currently Bill Gropp is acting NCSA director and Ed Seidel, the current director, has stepped up to serve as interim vice president for research for the University of Illinois System.)

Photos courtesy NCSA

Smarr recalled his “aha moment” that supercomputers should be more widely available and applied in science. He was at UIUC, busily applying computational methods to astrophysics, most famously his effort to solve general relativity equations for colliding black holes using numerical methods, an approach many colleagues thought a fool’s errand. Last year’s LIGO results proved dramatically otherwise. (See the HPCwire story, Gravitational Waves Detected! Historic LIGO Success Strikes Chord with Larry Smarr.)

At the time, UIUC had a “VAX 11/780 and the VIP, the VAX and Image Processing facility, which was about as good as any professors had in the country,” recalled Smarr. He had the chance to go to the Max Planck Institute to work with Karl-Heinz Winkler and Mike Norman and their supercomputer, a Cray 1. “Code that had taken 8 hours on the VAX, overnight – that’s the rate of progress you could make, one 8-hour run a night – I put on the Cray [and] started to go off to lunch.” Before he left the room, the job finished. “I said that’s not possible.” But it was. The Cray 1 was about 400x faster, changing an 8-hour VAX run into a roughly one-minute Cray run. “Every ten minutes I could make the same scientific progress that I was making every day. That was the aha moment.”
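The speedup figures quoted above can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch: the 8-hour run time and the factor of roughly 400 come from the article, while the variable names are illustrative.

```python
# Back-of-the-envelope check of the VAX-to-Cray speedup quoted in the article.
vax_runtime_hours = 8   # one overnight VAX run (from the article)
speedup = 400           # approximate Cray 1 vs. VAX factor (from the article)

# Convert the VAX run time to minutes and divide by the speedup factor.
cray_runtime_minutes = vax_runtime_hours * 60 / speedup

print(f"Estimated Cray 1 run time: {cray_runtime_minutes:.1f} minutes")
```

Under these figures the run takes a little over a minute, consistent with the job finishing before Smarr could leave the room.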

The rest is supercomputing history. Encouraged by Rich Isaacson, NSF’s Division Director for gravitational research, Smarr’s 1983 proposal percolated through NSF, culminating with the award in 1985. Perhaps not surprisingly, the Max Planck open-access approach was the model, with Illinois cloning Lawrence Livermore’s machine room. Smarr emphasized that many voices and individual efforts were involved in bringing NCSA to fruition. His talk briefly covered supercomputing’s past, present, and future – with many colorful anecdotes. NCSA has posted a video of Smarr’s full talk, which is included at the end of this article.

NSF Matters…and So Does Risk Taking

Early in his talk, Smarr paid tribute to NSF. NCSA and its four siblings represented one of NSF’s big bets. The Laser Interferometer Gravitational-wave Observatory (LIGO) program was perhaps the longest and most expensive individual NSF-funded program and also a huge risk. Both are delivering groundbreaking science. Taking on big risk-big reward projects is something NSF can and should do. We probably don’t do enough of them today, he suggested.

He recalled that when Isaacson encouraged him to submit the ‘NCSA’ proposal, Smarr responded, “But there is no program at NSF for this,” and Isaacson said, “At NSF we believe in proposal pressure from the community.”

NCSA switched from specially designed Crays to microprocessor-based machines from SGI in 1995, another big bet on a new idea. Global demand for microprocessors was growing a whole lot faster than the demand by “the few hundreds of people that bought Crays.” Smarr and NCSA, backed by NSF funding, bet on microprocessors for the next machine in what he calls a historic shift.

“We’d be about a 10,000 times slower today [if we had not chosen microprocessors]. It is this ability to take risks based on your knowledge of where the technology is going that has made all the difference,” he said. “The NSF is unique in my view in the world in continually working at the outer edge, driven by the best ideas that come out of the user community, and then those breakthroughs are very well coupled back into the corporate world.”

Since today we have smart phones whose processing power far exceeds early supercomputers, there are some who contend NSF’s supercomputer support must be done. Hardly, says Smarr. Rather, “NSF just keeps moving the goal lines exponentially ahead of the consumer market and that is one of the most important things that keeps the United States in its competitive position worldwide.”

I See You – Insight from Sight

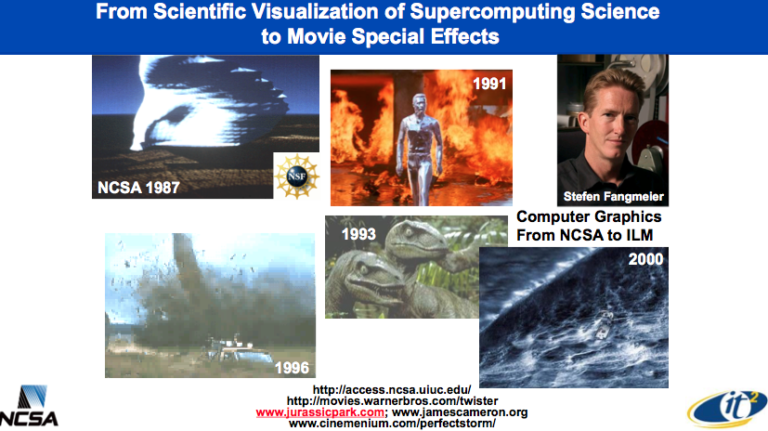

Even at the start of computing, he said, John von Neumann understood the need to make results more readily understandable. “In the early days, when computers were at about a floating point operation a second (FLOPs), von Neumann said they would generate so much data that it would overwhelm the human mind and so we needed to turn the data stream flowing from the computer into a visualization by running the output of the computer into an oscilloscope. So this idea was there from the very beginning, but NCSA took it to a whole another level.”

Scientific visualization has jumped way beyond oscilloscopes. Think 3D immersion CAVE environments, and more, said Smarr, citing the NCSA-Caterpillar collaboration. “Caterpillar drove [technology advance] by their investments in NCSA and interest in using virtual reality to create working models in virtual reality of their new earth moving machines before they were built, just out of the CAD/CAM drawing. They actually worked with us to show how you could have a global set of Caterpillar people working on details like where do we put the fuel tank opening and operator visibility.”

The idea of visualization is not pretty pictures; it’s insight. If you’ve got a computer “doing in those days a few billion 13-digit multiplies a second, which of those numbers do you want to look at to get that understanding? So the idea of scientific visualization was actually an intermediary technology to the human eye-brain system, the best pattern recognition computer yet.”

Of course, that doesn’t preclude pretty pictures that are content rich. Smarr cited NCSA alum Stefen Fangmeier, who took ideas nurtured at NCSA to Industrial Light & Magic, showing that science, not just an artist’s imagination, could be used to convey information. The result was the computer graphics seen in films such as “Twister, Jurassic Park, Terminator, Perfect Storm, and so forth.”

The staggering growth of data will require ever-improving visualization techniques that make insight more readily accessible.

Brain-Inspired Computing Architectures

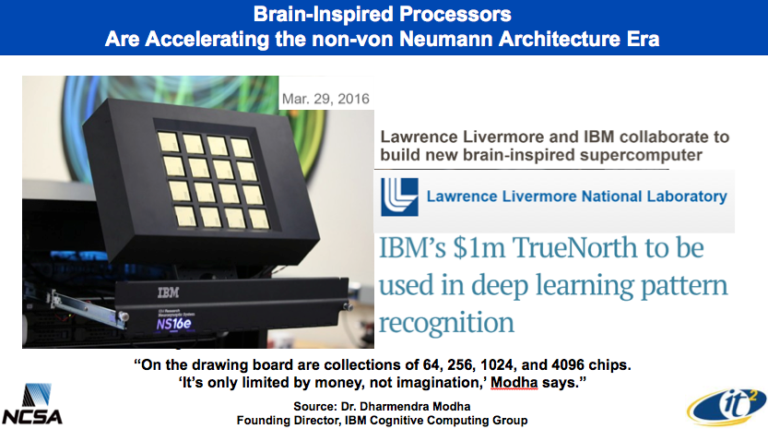

We’ll probably get to exascale computing using traditional architecture, thought Smarr. But to make sense of the tremendous data deluge, as well as to progress in deep learning and related areas, better pattern recognition technology will be required. Brain-inspired computing is a new source of inspiration and perhaps further along than many realize. A hybrid computing architecture is likely to emerge, mimicking in a way the so-called human right/left brain dichotomy.

“We are in a moment of transition in which data science and data analysis is becoming as important if not more important than traditional supercomputing,” said Smarr. New approaches beyond today’s cloud computing are needed, and brain-inspired co-processors look prominent among them.

“To research this new paradigm, Calit2 has set up a Pattern Recognition Lab (PRL) to bring this whole new generation of non-von Neumann processors in, put them in the presence of GPUs and Intel multicores to handle the general purpose stuff, then [porting] all the different machine learning algorithms onto them, optimizing them for a very wide set of applications.”

He’s hardly alone in this thinking and cited others, such as Horst Simon and Jack Dongarra, who’ve voiced similar opinions. He singled out IBM’s TrueNorth neuromorphic chip, the first non-von Neumann chip in the Calit2 PRL, which puts a million neurons and 256 million synapses in silicon, “the most components [on a] chip IBM has ever fabbed.” Lawrence Livermore National Laboratory – “whose supercomputer machine room we cloned, explicitly to make NCSA” – bought a 4x4 array of these neuromorphic chips and is collaborating with IBM to build a brain-inspired supercomputer that will be used in deep learning.

Most recently the PRL has added a radical new chip architecture produced by a San Diego startup. Smarr helped to recruit Dan Goldin, the longest-serving NASA administrator, to La Jolla, CA over ten years ago to do a startup (KnuEdge). “This isn’t your typical startup – Dan is now in his mid-70s. But ten years ago Dan spent two years in the Neuroscience Institute to figure out how to put into silicon what they had learned about how the brain learns.” Goldin then worked with Calit2 to prototype the first design of a computer board.

In June 2016, KnuEdge came out of stealth with its Hermosa chip. It’s a multilayer “cluster of digital signal processors that don’t have a clock, so it is asynchronous. Their Lambda Fabric is a completely different architecture than what we’re used to working with. That is now in our PRL,” said Smarr.

One of the brain’s advantages everyone is chasing is low power consumption. “Biological evolution has figured out how to get a computer to run a million times more energy efficient than an Exascale will run at and we cannot throw away that kind of advantage. So what I have been saying for 15 years is we’re going to have a new form of computer science and engineering emerge which abstracts out of biologically evolved entities what the principles of organization of those ‘computers’, if you like, are which is totally different than engineered computers.” (See HPCwire article, Think Fast – Is Neuromorphic Computing Set to Leap Forward?)

The Microbiome, Precision Medicine and Computing

Research in recent years has shown how important the microbiome – the population of bacteria in each of us – is to health. If genes and gene products are the key players in physiology, then the numbers tell the microbiome’s story. Inside most people there are around 10x more DNA-bearing bacterial cells than human DNA-bearing cells, and 100x more genes in the microbial DNA than in the human DNA. What’s more, the mix of species and their relative proportions inside a person matter greatly.

Put simply, ‘good’ bacteria promote health and help keep bad bacteria in check.

This is the “dark matter” of healthcare, said ex-cosmologist Smarr. Our efforts to understand and use the microbiome “will be completely transformative to medicine over the next five to ten years,” he said, and others agree. There is even a U.S. Presidential initiative Microbiome Project in addition to the U.S. Precision Health Initiative. Understanding the microbiome and effectively using it will require sequencing and regular monitoring – think time series experiments – of related biomarkers.

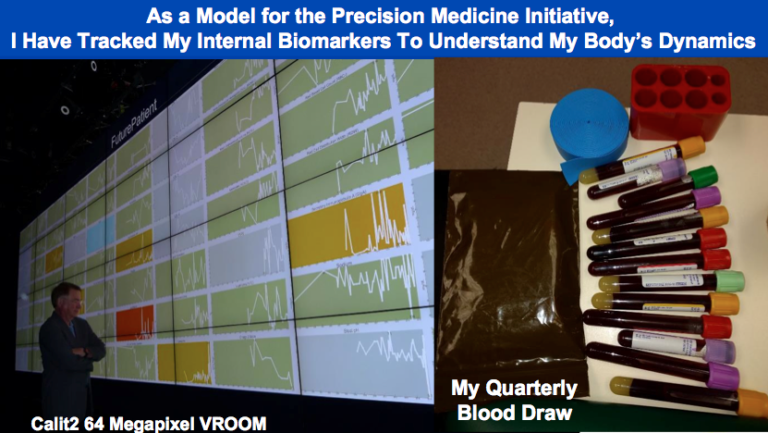

It turns out Smarr has been doing this on himself and discovered he has a gene variant which inclines him to Inflammatory Bowel Disease, which may in the future be treated by “gardening your microbiome’s ecology”. Skipping some of the details, the computational challenge is immense. His team started several years ago with a director’s discretionary grant on Gordon, provided by SDSC director Mike Norman. “Our team used 25 CPU-years to compute comparative gut microbiomes starting from 2.7 trillion DNA bases of my samples along with healthy and IBD subjects.”

He compared this work to his early work in the 1970s on general relativistic black hole dynamics, which took several hundred hours on a CDC 6600, versus the 800,000 or so core hours he, UCSD’s Rob Knight and their team are currently using on the San Diego Supercomputer Center’s Comet to work on microbiome ecology dynamics. Performing this kind of analysis on a population-wide scale, on an ongoing basis, is a huge compute project. There are 100 million times as many bacteria on earth as all the stars in the universe, noted Smarr, quoting Professor Julian Davies’s remark that once the diversity of the microbial world is cataloged, it will make “astronomy look like a pitiful science.”

All netted down, he said, “Living creatures are information entities, working their software out in organic chemistry instead of silicon, and that information is your DNA, but it’s both in your human and the microbes’ DNA. When you want to read out the state of that person you need to look at time series of the biomarkers in your blood and stool. If that’s going to be the future and my job has always been to live in the future, then I should turn my body into a biomarker and genomics ‘observatory’ and I started taking blood measurements and stool measurements periodically.

“Your stool by the way doesn’t get much respect – we’ve got to work on our attitude a little because stool is 40 percent microbes and 1 gram of stool contains 1 billion microbes, each of which has a DNA molecule 3-5 million bases long. So it’s the most information rich material you have ever laid eyes on.”

You get the idea.
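To put rough numbers on that claim, the figures quoted in the talk can be multiplied out. This is a sketch only: the microbe count and genome lengths are taken from the quote, the 2.7 trillion bases is the size of the team's earlier dataset, and the comparison between the two is an illustrative assumption, since that dataset pooled many samples and subjects rather than a single gram.

```python
# Rough estimate of DNA bases in one gram of stool, using figures quoted in the talk.
microbes_per_gram = 1_000_000_000    # 1 billion microbes per gram (from the quote)
bases_per_genome_low = 3_000_000     # 3 million bases per microbe (from the quote)
bases_per_genome_high = 5_000_000    # 5 million bases per microbe (from the quote)

bases_per_gram_low = microbes_per_gram * bases_per_genome_low
bases_per_gram_high = microbes_per_gram * bases_per_genome_high

# Dataset size from the earlier analysis on Gordon (2.7 trillion bases).
# Comparing it with a single gram is for scale only (an assumption of this sketch).
sequenced_bases = 2_700_000_000_000
ratio_low = bases_per_gram_low / sequenced_bases

print(f"Bases per gram: {bases_per_gram_low:.1e} to {bases_per_gram_high:.1e}")
print(f"Low estimate is about {ratio_low:,.0f}x the 2.7 trillion bases analyzed")
```

By this estimate a single gram holds on the order of a few quadrillion bases, which gives a sense of why population-scale monitoring becomes a supercomputing problem.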

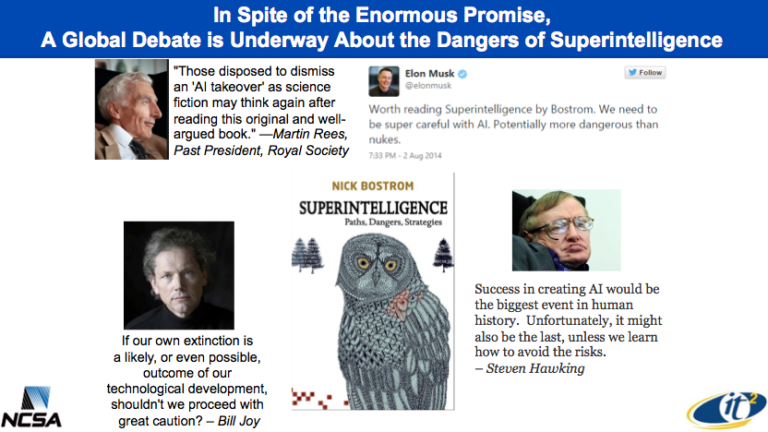

Preparing for Artificial Intelligence

Smarr’s last slide, shown below, contained a set of ominous quotes on the dangers of artificial intelligence from Stephen Hawking, Bill Joy, Elon Musk, and Martin Rees – names familiar to most of us and all people whom Smarr knows. He didn’t dwell on the dangers, but directly acknowledged they are real. He spent more time on why he thinks AI is closer than we may realize and how it can be beneficial, and suggested one way to prepare is for NSF to start stimulating thought on AI issues in youth and young scientists.

The technology itself is advancing on many fronts, whether running machine learning on traditional CPU/GPUs or on emerging neuromorphic chips (Smarr didn’t discuss quantum computing in his talk). He noted that LBNL’s Deputy Director Horst Simon predicts that in the 2020-2025 timeframe, an exascale supercomputer will be able to run a simulation of 100% of the scale of the human brain in real time. “It will be effectively as fast as a human brain,” said Smarr. Precisely what that means in terms of applications and AI remains unclear. But the technology will get us there.

Today, everyone’s favorite example of the state of machine learning as a surrogate for AI seems to be the Google DeepMind system’s victory this spring over Lee Sedol of Korea, one of the world’s best Go players.

“Google took 30 million moves of the best Go masters on the planet and fed those in as training sets. That [alone] would have made a computer hold its own against top Go players. But then Google’s team ran the trained AI against itself for millions of times coming up with moves of Go that no human had ever conceived of,” said Smarr. “So in less than two years from when Wired magazine ran a story titled ‘Go, the Ancient Game That Computers Still Can’t Win,’ Google [won].”

“Then Google takes that incredible software, what a treasure trove, and makes it open source and gives it to the world community in TensorFlow. We are using this every day at Calit2 to program these new chips (KnuEdge).” A research effort Smarr cited, being led by Jeremy Howard, is attempting to teach machines to read medical X-rays as well as “the best doctor in the world” using TensorFlow. “Howard says basically instead of programming the computer to do something, you give it a few examples and let it figure out how to do that. That’s the new paradigm.”

In fact, there are many aggressive efforts to develop the new paradigm and many of those efforts involve corporate IT giants advancing AI for their own purposes and putting their technology into the hands of academia for further development, pointed out Smarr. IBM is “betting the farm on Watson”. All of the new systems will not merely be powerful but hooked into vast databases.

For a feel of where this is going, consider the movie Her. “All of you should see it if you want to experience one of the best examples of speculative fiction painting a picture of where this process is taking us, where we all have individualized personalized AI agents, who learn more and more about you the more you interact with the [system]. And they are working with everybody across the planet simultaneously,” said Smarr.

Sounds very Big Brother-ish, and it could be, agrees Smarr. However, he remains optimistic. Like many of his generation, he grew up reading science fiction, including Isaac Asimov’s many robot-themed works.

“Asimov had the three laws to protect the robots from doing harm to humans. We’ll get through this AI transition I believe, but only if everybody realizes this is one of the most important change moments in human history, and it isn’t going to be happening 100 years from now, but rather it’s going to be in the next five, 10, to 20 years. One of the things I am hoping is NSF will be funding a lot of this research into the universities and to young people where they can start imagining these futures, playing with these new technologies, and helping us avoid some of the risks that these four of the smartest people on the planet are talking about here. My guess is that NCSA and the University of Illinois at Urbana-Champaign will be leaders in that effort,” concluded Smarr.

Related Links

HPCwire article by Editor John Russell

NCSA video of Larry Smarr's talk

Larry Smarr's slides

Smarr's blog

Media Contacts

Doug Ramsey, (858) 822-5825, dramsey@ucsd.edu