Mike Bailey and Students Demonstrate Interactive Graphics in Dome Environment

3.22.04 - In a recent presentation to trustees of the Reuben H. Fleet Science Center, San Diego Supercomputer Center graphics expert Mike Bailey and two of his UCSD students, Nick Gebbie and Matt Clothier, demonstrated interactive graphics in the Fleet Center's domed theater. The theater is a 76-foot-diameter tilted dome - one of the world's largest and most successful public visualization systems in support of popular science education.

What's significant about this work is that it addresses three fundamental needs: the ability to present large - and potentially topical - datasets, interactively, using inexpensive, commodity graphics platforms.

"The IMAX Dome is a marvelous immersive medium," says Jeff Kirsch, director of the Fleet Center. "But it's too expensive and cumbersome to present science-in-progress material to the public. To do that, we need a digital system with sufficient resolution and economic utility to greatly upgrade what we provide the general public without taxing our already strained budgets. The audience, some initially skeptical, were all convinced that partnering with SDSC puts us on the right track."

According to Bailey, two technical capabilities have combined to make this work possible. First, equipment manufacturers developed "monster" fisheye lenses for video projectors, which enable projecting a 225-degree field of vision. (The actual field of vision presented is 165 degrees due to collaring around the lens.) This is broader than a person's peripheral vision and thereby creates a sense of being immersed in the imagery. Second, graphics card vendors have made it possible for end users to write and embed their own code in the cards.

"This capability," says Bailey, "was clearly implemented for game developers. I've been following the features developed for that community for some time to see what I could do with them."

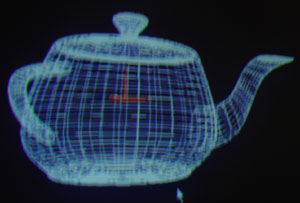

Bailey modified vendor-supplied OpenGL application-program interface software to present graphics in a dome environment. He loaded it into a commodity PC graphics card to achieve interactive graphics speeds for some capabilities that usually require significant amounts of processing when performed in a computer's main CPU.

"It's well known in the computer graphics field that fisheye lenses distort images," says Bailey. "So we applied what you might call an inverse distortion, that is, distortion in the other direction, to pre-correct for the eventual lens distortion. The equations are extremely non-linear and thus can't be handled by a graphics card's standard functionality."
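The article does not give Bailey's equations, so the following is only an illustrative sketch of the kind of non-linear mapping involved. It assumes an equidistant ("f-theta") fisheye model, in which distance from the image center grows in proportion to the angle from the lens axis. The function name `fisheye_project`, the choice of +z as the lens axis, and the 165-degree default are all assumptions made for illustration.

```python
import math

def fisheye_project(direction, fov_degrees=165.0):
    """Map a 3D view direction to normalized fisheye image coordinates.

    Assumption: equidistant fisheye model with the lens axis along +z.
    Returns (u, v) inside the unit circle, or None when the direction
    falls outside the lens's field of view.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")

    # Angle between the view direction and the lens axis.
    theta = math.acos(max(-1.0, min(1.0, z / length)))

    half_fov = math.radians(fov_degrees) / 2.0
    if theta > half_fov:
        return None  # not visible through the lens

    # Equidistant model: image radius is proportional to the angle.
    r = theta / half_fov
    # Direction around the lens axis is preserved.
    phi = math.atan2(y, x)
    return (r * math.cos(phi), r * math.sin(phi))
```

The `acos` and `atan2` steps are why a mapping like this cannot be written as a single matrix multiply, which is the only kind of vertex transformation a fixed-function graphics pipeline applies. Running the mapping per vertex on a programmable card is one plausible way to apply it at interactive rates.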

With the help of Ed Lantz at Spitz, Inc., creators of ElectricSky II, the team borrowed a JVC DLA-QXIG (2048 x 1536 pixels) ultra-high resolution projection system with a custom Spitz lens and linked it with a PC borrowed from Bailey's lab for a few days of testing in the Fleet Space Theater. Gebbie used Bailey's pre-distortion code to present his work on terrain visualization in the immersive environment. Clothier wrote a program to stitch together 360 degrees of images to present composite panoramic images in the environment.

Gebbie and Clothier are graduate students in UCSD's Computer Science and Engineering department and have received funding from Calit². Bailey is director of visualization at SDSC, an adjunct professor at UCSD, and a member of the Interfaces and Software Systems layer at Calit².

Bailey and Kirsch plan to write a proposal to the National Science Foundation to develop what they call agile content. A typical IMAX film consumes 13,000 to 18,000 feet of film - about three miles' worth. And therein lies the problem: IMAX films are enormously expensive and time-consuming to produce and so are not adaptive enough to present emerging topics in math and science. "We wanted a more agile and inexpensive approach to science outreach and education," says Bailey, "and that led us to consider graphics PCs."

Bailey's team has potentially reduced what might otherwise be a three-year development period for an IMAX movie to as little as a few weeks. "And," Bailey is quick to emphasize, "we can use this as a way to take advantage of the student talent we have at UCSD."

This system could be used to deliver real-time data in three dimensions to an audience and allow them to interact with it. Bailey says his team could take nearly any data, regardless of location or format, such as from Calit² sensor nets, and create an interactive demo in a couple of weeks using college students who know OpenGL.

"In fact," he says, "I can even imagine a collaborative dome-to-dome environment, supported by OptIPuter optical networking, that would enable two groups of students in different locations to interact with each other's data. And that's only one possibility."