UCSD Researchers Modify Kinect Gaming Device to Scan in 3D

By Chris Palmer

San Diego, Calif., July 28, 2011 -- University of California, San Diego students preparing for a future archaeological dig in Jordan will likely pack a Microsoft Kinect, but it won’t be used for post-dig, all-night gaming marathons. Instead, the students will use a modified version of the Xbox 360 peripheral in the field to take high-quality, low-cost 3D scans of dig sites.


Jürgen Schulze, a research scientist in the UCSD division of the California Institute for Telecommunications and Information Technology (Calit2), and his master’s student Daniel Tenedorio have figured out a way to extract the data streaming from the Kinect’s onboard color camera and infrared sensor to make hand-held 3D scans of small objects and people.

Currently, the researchers can use scans of people made with the modified Kinect to produce cheap, quickly made avatars that could conceivably be plugged right into virtual worlds such as Second Life.

Schulze's ultimate goal, however, is to extend the technology to scan entire buildings and even neighborhoods. For the initial field application of their modified Kinect – dubbed ArKinect (a mashup of archaeology and Kinect) – Schulze plans to train engineering and archaeology students to use the device to collect data on a future expedition to Jordan led by Thomas Levy, associate director of the Center of Interdisciplinary Science for Art, Architecture, and Archaeology (CISA3).

“We are hoping that by using the Kinect we can create a mobile scanning system that is accurate enough to get fairly realistic 3D models of ancient excavation sites,” says Schulze, whose lab specializes in developing 3D visualization technology.

The scans collected at sites in Jordan or elsewhere can later be made into 3D models and projected in Calit2’s StarCAVE, a 360-degree, 16-panel immersive virtual reality environment that enables researchers to interact with virtual renderings of objects and environments. Three-dimensional models of artifacts reveal more than 2D photographs do about properties such as symmetry (and hence, for example, the quality of craftsmanship), and 3D models of dig sites can help archaeologists keep track of exactly where artifacts were found.


As of now, the modified Kinect system relies on an overhead video tracking system, limiting its range to relatively small indoor spaces. However, Schulze notes, “In the future, we would like to make this device independent of the tracking system, which would allow us to take the system outside into the field, where we could scan arbitrarily large environments. We can then use the 3D model, walk around it, we can move it around, we can look at it from all sides.”

“There may be experts off site that have access to a CAVE system,” he adds, “and they could collaborate remotely with researchers in the field. This technology could also potentially be used in a disaster site, like an earthquake, where the scene can be digitized and viewed remotely to help direct search and rescue operations.”

Schulze adds that it may even be possible to stream live reconstructions of objects or scenes scanned in the field with the Kinect to the StarCAVE over a standard 3G or wireless broadband connection.

From the Living Room to the Lab

Since its release last November, the Kinect -- now the fastest-selling consumer electronics device of all time -- has captured the imagination of hundreds of thousands of videogame enthusiasts as well as many university researchers, who have modified the device for use in projects ranging from robotic telesurgery to navigation systems for the blind.

Originally intended to sit atop a television and sense the movements of users playing videogames, the Kinect was repurposed by Tenedorio to capture 3D maps of stationary objects. The scanning process, which entails moving the device by hand over all surfaces of an object, looks somewhat like having a metal-detecting wand waved over one’s body at the airport (if it were done rather slowly by an overzealous TSA agent).


Schulze likens the procedure to using a can of spray paint: “Imagine you wanted to spray paint an entire person. To do a complete job, you would have to point the can at every surface, under the arms, between the fingers, and so on. Scanning a person with the Kinect works the same way.”

The ability to operate the Kinect freehand is a huge advantage over other scanning systems such as LIDAR (light detection and ranging), which produces more accurate scans but must be kept stationary in order to be aimed precisely.

“Having a hand-held device is important for these excavation sites where the ground is rugged and uneven,” explains Schulze. “With the Kinect, that doesn’t slow you down at all.”

How it works

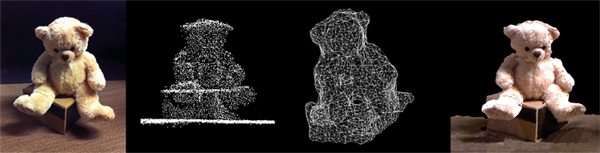

The Kinect projects a pattern of infrared dots (invisible to the human eye) onto an object; the dots reflect off the object and are captured by the device’s infrared sensor. From the reflected dot pattern, the device computes a 3D depth map. Nearby points in the depth map are linked together to form a triangular mesh of the object, and the surface of each triangle is then filled in with texture and color information from the Kinect’s color camera. A scan is taken 10 times per second, and data from thousands of scans are combined in real time, yielding a 3D model of the original object or person.
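The meshing step can be illustrated with a short Python sketch. It assumes a simple pinhole camera model with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), a depth image in meters where 0 means "no reading," and a hypothetical `max_jump` threshold that keeps the mesh from bridging depth edges. It is not the ArKinect team's code, and color texturing is left out.

```python
import numpy as np

def depth_to_mesh(depth, fx, fy, cx, cy, max_jump=0.05):
    """Back-project a depth image (meters, 0 = no reading) into 3D points and
    link neighboring pixels into triangles. Returns (vertices, triangles)."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]           # pixel row (v) and column (u) indices
    z = depth
    x = (u - cx) * z / fx               # pinhole back-projection (assumed model)
    y = (v - cy) * z / fy
    vertices = np.stack([x, y, z], axis=-1).reshape(-1, 3)

    def idx(r, c):
        return r * w + c                # flat vertex index for pixel (r, c)

    triangles = []
    for r in range(h - 1):
        for c in range(w - 1):
            quad = [(r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)]
            zs = [depth[p] for p in quad]
            # Skip quads with a missing reading or a large depth jump (an edge).
            if min(zs) <= 0 or max(zs) - min(zs) > max_jump:
                continue
            a, b, d, e = (idx(*p) for p in quad)
            triangles.append((a, d, b))  # two triangles per 2x2 pixel block
            triangles.append((b, d, e))
    return vertices, np.array(triangles, dtype=np.int64).reshape(-1, 3)
```

Pixels with no reading still get a placeholder vertex so that vertex indices stay aligned with pixel positions; no triangle references those vertices.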

One challenge Schulze and his team faced was spatially aligning all the scans. Because the ArKinect scans are done freehand, each scan is taken at a slightly different position and orientation. Without a mechanism for spatially aligning the scans, the 3D model created would be a discontinuous, Picasso-esque jumble of images.


To overcome this challenge, Tenedorio outfitted the ArKinect with a five-pronged infrared sensor attached to its top surface. The overhead video cameras track this sensor in space, thereby tagging each of the ArKinect’s scans with its exact position and orientation. This tracking makes it possible to seamlessly stitch together information from the scans, resulting in a stable 3D image.
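Tagging each scan with a tracked pose means every scan can be mapped into one shared coordinate frame before stitching. The Python sketch below assumes the tracker reports the marker's pose as a unit quaternion plus a position, and that a fixed, hypothetical `camera_in_marker` transform between the marker and the depth camera has been calibrated once. The function names are illustrative, not taken from the project.

```python
import numpy as np

def quat_to_matrix(q):
    """3x3 rotation matrix from a quaternion (w, x, y, z); normalized first."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y)],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)],
    ])

def pose_matrix(q, t):
    """4x4 rigid transform from a rotation quaternion and a translation."""
    m = np.eye(4)
    m[:3, :3] = quat_to_matrix(np.asarray(q, dtype=float))
    m[:3, 3] = t
    return m

def scan_to_world(points_cam, tracker_pose, camera_in_marker):
    """Map one scan's (N, 3) camera-space points into the shared world frame.
    tracker_pose: marker -> world transform reported by the overhead cameras.
    camera_in_marker: camera -> marker transform (hypothetical calibration)."""
    camera_to_world = tracker_pose @ camera_in_marker
    homog = np.hstack([points_cam, np.ones((len(points_cam), 1))])
    return (homog @ camera_to_world.T)[:, :3]
```

Once every scan is expressed in world coordinates this way, overlapping scans line up and can be merged instead of forming the "Picasso-esque jumble" described above.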

The team is working on a tracking algorithm that incorporates smartphone sensors, such as an accelerometer, a gyroscope, and GPS (global positioning system). In combination with the existing approach for stitching scan data together, the tracking algorithm would eliminate the need to acquire position and orientation information from the overhead tracking cameras.
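The article does not say how the smartphone sensors will be fused. As one common, simple stand-in, a complementary filter blends gyroscope integration (smooth but prone to drift) with the accelerometer's reading of gravity (noisy but drift-free). This Python sketch estimates a single tilt angle only; the `alpha` weight and the `atan2(ax, az)` axis convention are assumptions, and GPS-based position is not shown.

```python
import math

def complementary_pitch(samples, dt, alpha=0.98):
    """Estimate pitch (radians) from (gyro_rate, accel_x, accel_z) samples
    taken every dt seconds. Returns the estimate after each sample."""
    pitch = 0.0
    history = []
    for gyro_rate, ax, az in samples:
        gyro_estimate = pitch + gyro_rate * dt   # integrate angular rate
        accel_estimate = math.atan2(ax, az)      # tilt from gravity (assumed axes)
        # Trust the gyro short-term and let the accelerometer correct drift.
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_estimate
        history.append(pitch)
    return history
```

With `alpha` close to 1, the gyroscope dominates over short intervals while the accelerometer slowly pulls out accumulated drift, so a constant gyro bias produces a small bounded error instead of an ever-growing one.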


A major advantage of the ArKinect is that scan progress can be assessed on a computer monitor in real time. Notes Schulze, “You can see right away what you are scanning. That allows you to find holes so that when there is occlusion, you can just move the Kinect over it and fill it in.”

This is in contrast to conventional scanning devices, where data is collected and then analyzed offline, often in a separate location, so holes in a scan may not be discovered until it is too late to fill them.

Deleting unnecessary data, in fact, turned out to be the research team’s most daunting challenge. “Simply adding each frame into a global model adds too much data; within seconds, the computer cannot render the model and the system breaks,” says Tenedorio, who is joining Google as a software engineer following completion of his master’s degree in Computer Science and Engineering this July.

“One of the primary thrusts of our research is to discover how to throw away duplicate points in the model and retain only the unique ones.”
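One widely used way to discard duplicate points is a voxel grid: space is divided into small cubes, and each cube keeps at most one point. The team's actual deduplication method is not described here, so treat this Python sketch as an illustration only; the class name and the 5 mm default `voxel_size` are hypothetical.

```python
import numpy as np

class VoxelDedupModel:
    """Global point model that keeps at most one point per voxel.
    Points from a new frame that land in an already-occupied voxel are
    treated as duplicates and discarded."""

    def __init__(self, voxel_size=0.005):
        self.voxel_size = voxel_size
        self.points = {}  # voxel index (i, j, k) -> stored 3D point

    def add_frame(self, world_points):
        """Insert an (N, 3) array of world-space points; return how many were new."""
        keys = np.floor(world_points / self.voxel_size).astype(np.int64)
        added = 0
        for key, p in zip(map(tuple, keys), world_points):
            if key not in self.points:  # first point seen in this voxel wins
                self.points[key] = p
                added += 1
        return added

    def as_array(self):
        """All retained points as an (M, 3) array."""
        return np.array(list(self.points.values())).reshape(-1, 3)
```

Repeated passes over the same surface then add almost nothing, so the model's size is bounded by the surface area covered (at the chosen voxel resolution) rather than by how long the scan runs.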

The Kinect streams data at 40 megabytes per second – enough to fill an entire DVD every two minutes. Keeping the amount of stored data to a minimum will allow a scan of a person to occupy only a few hundred kilobytes of storage, about the same as a picture taken with a digital camera.
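The DVD comparison checks out against a 4.7 GB single-layer disc. The storage estimate on the second line uses a hypothetical model of 20,000 points, each storing three 4-byte coordinates and 3 bytes of RGB color; the point count is an assumption chosen only to show how a deduplicated scan could land in the few-hundred-kilobyte range.

$$
40\ \text{MB/s} \times 120\ \text{s} = 4800\ \text{MB} \approx 4.8\ \text{GB}
$$

$$
20{,}000\ \text{points} \times (3 \times 4 + 3)\ \text{B/point} = 300{,}000\ \text{B} \approx 300\ \text{KB}
$$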

Another advantage of the Kinect is cost: It retails for $150. This low price tag, coupled with Schulze’s efforts to make the ArKinect a portable, self-contained, battery-powered instrument with an onboard screen for monitoring scan progress, makes it feasible to send an ArKinect with Levy’s students to Jordan.

Schulze’s team is currently writing a paper about the ArKinect project to submit to a major international virtual reality conference.

Other students (all from UCSD’s Computer Science and Engineering department) involved in the project include master’s students Marlena Fecho and Jorge Schwarzhaupt, who helped develop the ArKinect’s scanning algorithm, and master’s student James Lue and undergraduate student Robert Pardridge, who helped with the 3D meshing program that creates surfaces from points extracted from the ArKinect’s depth map.

Media Contacts

Tiffany Fox, (858) 246-0353, tfox@ucsd.edu