Software System Labels Coral Reef Images in Record Time

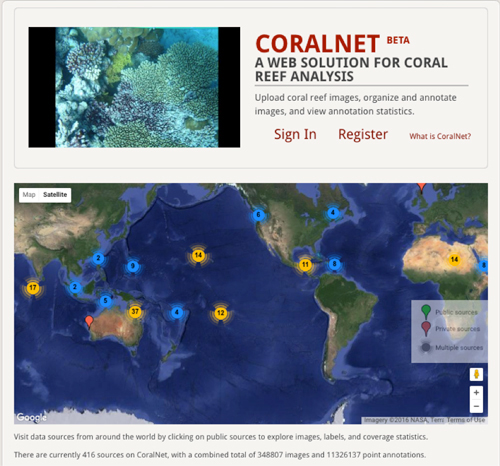

San Diego, January 11, 2016 -- Computer scientists at UC San Diego have released a new version of a software system that processes images from the world’s coral reefs between 10 and 100 times faster than manual processing.

This speedup is possible because the new version of the system, dubbed CoralNet Beta, incorporates deep learning, a technique that uses vast networks of artificial neurons to learn to interpret image content.

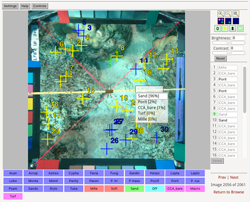

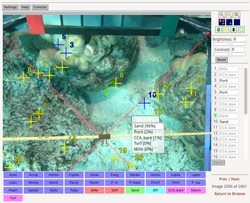

CoralNet Beta cuts the time needed to analyze a typical 1,200-image diver survey of the ocean floor from 10 weeks to just one week, with the same level of accuracy. Coral ecologists and government organizations, such as the National Oceanic and Atmospheric Administration (NOAA), also use CoralNet to automatically process images from autonomous underwater vehicles. The system allows researchers to label different types of coral (including whether they have been bleached), invertebrates, algae, and more. In all, more than 2,200 labels are available on the site.

“This will allow researchers to better understand the changes and degradation happening in coral reefs,” said David Kriegman, a computer science professor at the Jacobs School of Engineering at UC San Diego and one of the project’s advisers.

The Beta version of the system runs on a deep neural network with more than 147 million neural connections. “We expect users to see a very significant improvement in automated annotation performance compared to the previous version, allowing more images to be annotated quicker—meaning more time for field deployment and higher-level data analysis,” said CoralNet founder and project manager Oscar Beijbom, a CSE alumnus (Ph.D. '15) whose June 2015 dissertation was on "Automated Annotation of Coral Reef Survey Images."

He created CoralNet Alpha in 2012 to help label images gathered by oceanographers around the world. Since then, more than 500 users, from research groups to nonprofits to government organizations, have uploaded more than 350,000 survey images to the system. Researchers used CoralNet Alpha to label more than five million data points across these images with a random-point labeling tool designed by UC San Diego alumnus Stephen Chen, the project’s lead developer.

“Over time, news of the site spread by word of mouth, and suddenly it was used all over the world,” said Beijbom.

Other updates in the Beta version include an improved user interface, better web security, and scalable hosting on Amazon Web Services.

CoralNet was originally developed as part of the Computer Vision Coral Ecology Project funded by the National Science Foundation. NOAA funded the Beta version.

Media Contacts

Ioana Patringenaru, (858) 822-0899, ipatrin@ucsd.edu
