Life, the Universe and Everything

Science, Frankfurter Allgemeine Sunday Newspaper, 7/13/2003, No. 28, p. 55

A visit with Larry Smarr, the prophet of the "planetary computer"

BY ULF VON RAUCHHAUPT

(English translation by Stephanie and Ingolf Krueger)

SAN DIEGO - There was no more room in the nifty "Supercomputer Center" building, every corner already being jam-packed with cabinets full of terabytes and gigaflops. So the "OptIPuter" is housed a few hundred meters away, in a maintenance building on the campus of the University of California, San Diego (UCSD): a brown sheet-steel facade, no windows, and an entrance that seems more likely to lead to a bicycle cellar than to the nucleus of the information revolution's next phase.

After all, the OptIPuter is one of two test facilities for networking supercomputers via fiber-optic cables. With its help, a network is eventually to emerge that will make today's Internet look like a hiking trail next to a highway, and an intelligent highway at that, one that adds and removes lanes on its own depending on traffic. On top of that, this network will soon connect not only full-blown computers but ultimately everything capable of processing, storing, capturing, or displaying data: phones, cameras, temperature sensors, pacemakers, simply everything.

This sounds utopian, but compared with some other dreams of the future, this one can at least count on the known laws of nature, and there is already something to see in the university boiler room in San Diego, even if it is only a few cubic meters of electronics hidden behind aluminum panels with small blinking lights and a plethora of thin yellow cables. It is a strangely bare sight, yet one that strikes the visitor with a very Californian symbolism. Wasn't the first PC, the nucleus of the technology that revolutionized both our private and our working lives barely a decade ago, soldered together in a garage?

There is no soldering iron to be found here, however. Nor is the father of the OptIPuter anything like a garage tinkerer. In fact, Larry Smarr is not a technician at all, although he has held a professorship at UCSD's Jacobs School of Engineering for three years. Still somewhat formal by Californian standards, Smarr also has little in common with the protagonists of earlier stages of the information revolution, despite all his visionary eloquence. He bears little resemblance to entrepreneurial engineers such as Carver Mead or Gordon Moore, who in the sixties conceived techniques for miniaturizing electronic circuits that have since ignited the exponential growth of computer performance (a phenomenon known as Moore's Law), and even less to brilliant college dropouts such as Bill Gates or Steve Jobs, who used Moore's Law to turn the computer into a commodity.

His Midwestern origins are not the only reason for this. Larry Smarr, born in 1948 and thus seven years older than Gates and Jobs, came to computing as a mere "user," someone simply trying to put the machine to work. In relativistic astrophysics, his original field, many objects of research, such as black holes and supernova explosions, can be studied in detail only through computer simulations, a very compute-intensive business that back in the eighties only the best supercomputers could handle. Such machines, however, were not available in adequate numbers for non-military basic research at the time. "Here I was, having to go to Germany, to the Max Planck Institute for Astrophysics in Garching, to be able to use a CRAY supercomputer, built in America, there," Smarr remembers, "and I thought: Something is wrong here!" Back home, he convinced the National Science Foundation (NSF) in 1983 to set up national supercomputer centers. Two years later, with Larry Smarr as its director, the National Center for Supercomputing Applications (NCSA) opened its doors in Urbana, Illinois, south of Chicago.

As a user of supercomputers himself (a former one, by now), Smarr quickly realized that merely providing computing power in a few centers was inadequate for basic research, which even then was already largely globalized. It was equally important to be able to send data and programs quickly over continental and intercontinental distances. The technology for this already existed: in 1983 the ARPAnet, the Internet's predecessor, had been switched over to the TCP/IP protocols, which made it straightforward to exchange data among various American computer networks. Only the transmission capacity, the so-called bandwidth, fell far short of what researchers needed. Larry Smarr approached the NSF again, and the result, in 1986, was the NSF-Net, a powerful so-called backbone network that laid the foundation for what we call the Internet today.

Despite such measures and the "data highway" rhetoric of many research politicians, transmission capacity never caught up with the rapidly growing demand for bandwidth. In the PC segment alone, a new generation of computers hit the market every three years, and each time the volume of data to be transmitted grew larger. Says Larry Smarr: "In the past, the lack of bandwidth always used to be the bottleneck of computer technology."

This bottleneck is not merely a nuisance; it actively obstructs progress, not only in data traffic but in computing as such. Trials are already underway in many places with a technology that might allow the computer boom to outlast the end of rising processor performance, an end that even Gordon Moore foresees within the next few years. It is called grid computing: many computers, often of different models and running different operating systems, jointly execute a computation. Research institutions with extreme demands for computing capacity, such as CERN, the European particle physics laboratory in Geneva, increasingly rely on this technique: instead of buying tailor-made supercomputers, they connect many small conventional computers. In principle, this also makes it possible to link computers at various locations around the globe over the Internet into a single virtual computer.
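The core idea of grid computing, splitting one computation into pieces that many machines work on in parallel and then combining their partial results, can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: threads on a single machine stand in for networked computers, and the function names (`split_range`, `partial_sum_of_squares`, `grid_sum_of_squares`) are hypothetical. Real grid systems must also schedule jobs, move data between sites, and cope with failures, all of which this sketch ignores.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n, parts):
    """Divide the index range [0, n) into `parts` contiguous chunks of near-equal size."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks each get one additional element.
        stop = start + step + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum_of_squares(bounds):
    """One work unit: a single hypothetical 'node' sums k*k over its half-open range."""
    start, stop = bounds
    return sum(k * k for k in range(start, stop))


def grid_sum_of_squares(n, workers=4):
    """Scatter chunks to workers, then gather and combine their partial results.

    Threads stand in for remote machines here; they illustrate the
    scatter/gather pattern, not an actual speedup.
    """
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(grid_sum_of_squares(1000))  # → 332833500, same as one machine computing alone
```

The caller never sees which worker handled which chunk, mirroring the idea that a grid user need not know where a program is actually being executed.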

The user of a grid system doesn't even need to know where his program is currently being executed or where the intermediate results are being stored. Experts predict a bright future for this "distributed computing"; the only reason it has not really caught on yet is that the Internet, despite all its backbones, still cannot handle the enormous amounts of data involved. To change this, Larry Smarr left his position at NCSA and came to California.

Some years ago, Gray Davis, governor of California, the home of the digital revolution (and, as it happens, a state that is almost bankrupt), together with the American federal government and numerous industrial partners, put up half a billion dollars there for a brand-new institute. Tied to two campuses of the University of California, San Diego and Irvine, and interwoven with a plethora of other private and public research sites, it is meant to be an institute of a very new kind. The many partners involved may also explain its almost Byzantine name: "California Institute for Telecommunications and Information Technology." At least its short form, Calit² (pronounced "Cal-I-T-squared"), puts it at the forefront of the current trend toward logos with a mathematical look.

Directed by Smarr, Calit² is devoted to two aspects of the data transport of the future. First, what must the Internet backbones, i.e. the arterial roads, of the future look like to realize the dream of global grid computing? Second, how can wireless devices be integrated into the worldwide data network?

Larry Smarr's answer to the first question is the OptIPuter, based on optical fiber. Thanks to enormous advances in fiber-optic technology, ever larger volumes of data can be funneled through such lines; transmission capacity is by now growing faster than the performance of the computers themselves. "Moore's Law says that the number of transistors we pack into a microprocessor doubles every 18 months," says Greg Hidley, one of Smarr's staff. "Storage capacity even doubles every 12 months. If you look at the capacities of fiber-optic networks, however, their bandwidth doubles every nine months." Still, a great deal of development work is needed to tap the exploding potential of fiber-optic networks and so revolutionize worldwide data transfer.
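The doubling times Hidley quotes translate into very different growth factors over the same period. As a rough illustration, assuming each trend holds steadily and compounds as 2 raised to (elapsed months / doubling time), this short Python sketch compares them over three years; the names `DOUBLING_MONTHS`, `growth_factor` and `growth_table` are hypothetical.

```python
# Doubling times in months, as quoted by Greg Hidley.
DOUBLING_MONTHS = {
    "transistors per microprocessor": 18,
    "storage capacity": 12,
    "fiber-optic bandwidth": 9,
}


def growth_factor(months, doubling_months):
    """Growth after `months`, assuming steady doubling every `doubling_months` (an idealization)."""
    return 2 ** (months / doubling_months)


def growth_table(months):
    """Growth factor of each quantity over the same period."""
    return {name: growth_factor(months, d) for name, d in DOUBLING_MONTHS.items()}


if __name__ == "__main__":
    for name, factor in growth_table(36).items():
        print(f"{name}: x{factor:g} in 36 months")
```

Under these assumptions, bandwidth grows sixteenfold in three years while transistor counts only quadruple, which is why Smarr expects the bottleneck to shift from the network to the processors.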

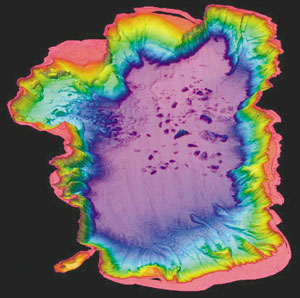

San Diego already offers a foretaste of the global fiber-optic grid today. Not far from the UCSD campus, on the Pacific coast, lies the Scripps Institution of Oceanography, one of the country's leading geoscience research institutes. There, in collaboration with Calit², a so-called visualization center has been established: a kind of 3-D movie theater where geologists and oceanographers can literally immerse themselves in their subject. A computer simulation, fed with data from observation satellites, test drillings, and seismometer stations, renders the rock layers of an earthquake region as a high-resolution three-dimensional panorama. Using a joystick, researchers can move through this panorama as if they were flying right through the rock formations. This is meant to let the researchers gathered in front of the screen discuss the data and how to interpret them.

At San Diego State University, at the other end of town, a second such visualization center allows researchers to take part simultaneously in the session at Scripps. The two centers are linked by a bundle of high-performance optical fibers that carry 2.5 gigabits per second in both directions, enough to transmit the entire computer simulation in real time and fully interactively. An Internet based on the OptIPuter could ship such masses of data between any two network connections anywhere in the world, provided that the connected computers are powerful enough. "Soon the network will no longer be the bottleneck," predicts Larry Smarr. "The processors are the bottleneck."

Yet for Smarr and his Calit² colleagues, fiber-optic networks are only one pillar of the future Internet. They will form the infrastructure connecting many local networks, and those networks will be largely wireless.

Like optical fiber, digital radio networks are not a new technology as such. By now, so many cellular network companies have settled around Sorrento Valley, north of San Diego, that some already call the area "Wireless Valley." The communications giant QUALCOMM, after which a San Diego sports stadium is named, has as big a presence there as Martin Cooper's company. In 1973 Cooper, then still a Motorola engineer, presented the first truly mobile phone: a brick-shaped monster weighing 800 grams.

Larry Smarr is thoroughly convinced that the information revolution will gain full momentum only once high-performance Internet, grid computing, and wireless technology are linked. He sees comprehensive networking coming our way, eventually including all the many everyday objects with embedded digital controls, since they are all basically computers and thus potential network nodes. "Today the Internet connects roughly 200 million PCs - but within five years add a billion cellular phones, always connected to the Internet. They become part of a planetary computer that constantly grows and grows." "Ubiquitous computing" emerges from "distributed computing." The myriad networked processors will combine into a gigantic reservoir of computing power, and all the networked cameras and sensors will combine into an enormous data pool containing information about our planet and what happens on it. And all of it will be accessible anytime, anywhere, from any cell phone. "The entire world will be wired in a certain sense. And this will change our lives, forever."

These changes, however, will have little in common with the frequently conjured dark visions of a totalitarian Big Brother state, Smarr believes. People's tendency to develop socially sanctioned codes of conduct will counter the danger of abuse, much like the rule that one does not read letters addressed to someone else. Instead, these changes will enrich people's lives wherever they deem it necessary and sensible. Of course, Larry Smarr is a technology optimist by profession, but perhaps for that very reason he is also someone who refuses to let his visions run wild beyond his own field of expertise.

Others, such as the cellular-technology pioneer Martin Cooper, are more inclined to speculate. "If we really build the network Larry talks about, then we have a computer that is infinitely more intelligent than we are," says Cooper, who promptly follows up with a joke: "Some day, when not only all computers on our planet are connected, but also all computers on all inhabited planets, somebody will have the honor to turn the switch and ask the first question of this absolute computer. And so they turn the switch and ask, 'Does God exist?' Suddenly the heavens break open, a lightning bolt strikes the earth, and a voice thunders, 'As of now.'"
