Machine Learning Enhances Non-verbal Communication in Online Classrooms

By Doug Ramsey

Monday, June 21, 2021 — Researchers in the Center for Research on Entertainment and Learning (CREL) at the University of California San Diego have developed a system to analyze and track eye movements to enhance teaching in tomorrow’s virtual classrooms – and perhaps future virtual concert halls as well.

UC San Diego music and computer science professor Shlomo Dubnov, an expert on computer music who directs the Qualcomm Institute-based CREL, began developing the new tool to deal with a downside of teaching music over Zoom during the COVID-19 pandemic.

“In a music classroom, non-verbal communication such as facial affect and body gestures is critical to keep students on task, coordinate musical flow and communicate improvisational ideas,” said Dubnov. “Unfortunately, this non-verbal aspect of teaching and learning is dramatically hampered in the virtual classroom where you don’t inhabit the same physical space.”

To overcome the problem, Dubnov and Ph.D. student Ross Greer recently published a conference paper* on a system that uses eye tracking and machine learning to allow an educator to make ‘eye contact’ with individual students or performers in disparate locations – and lets each student know when he or she is the focus of the teacher’s attention.

The researchers built a prototype system and undertook a pilot study in a virtual music class at UC San Diego via Zoom.

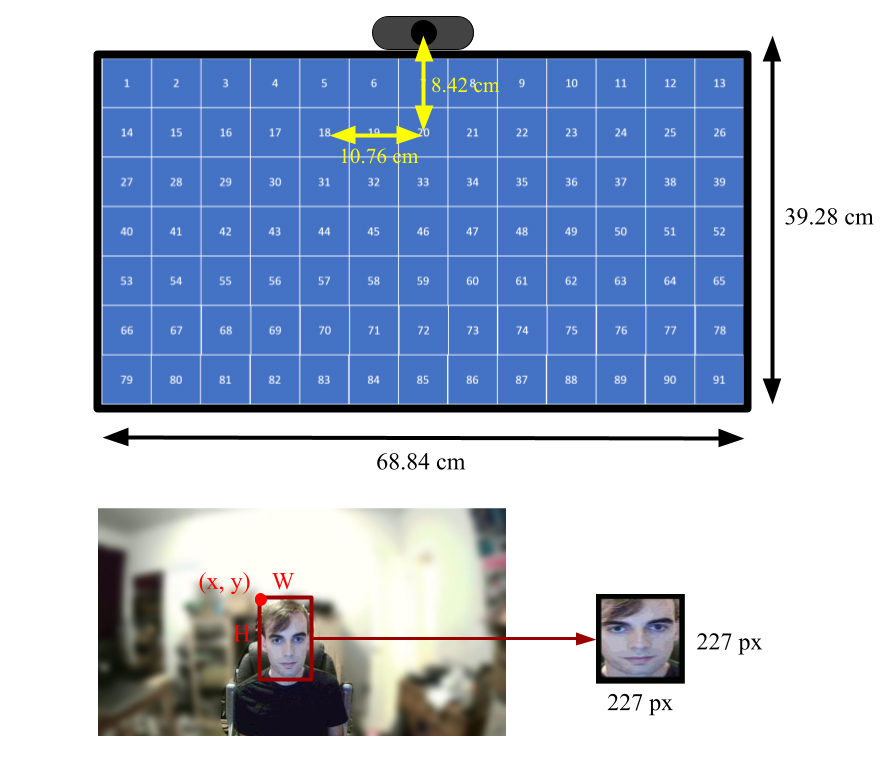

“Our system uses a camera to capture the presenter’s eye movements to track where they are looking on screen,” explained Greer, an electrical and computer engineering Ph.D. student in UC San Diego’s Jacobs School of Engineering. “We divided the screen into 91 squares, and after determining the location of the teacher’s face and eyes, we came up with a ‘gaze-estimation’ algorithm that provides the best estimate of which box – and therefore which student – the teacher is looking at.”

As the system recognizes a change in where the teacher is looking, the algorithm determines the identity of the student and tags his or her name on screen so everyone knows whom the presenter is focusing on.

In the pilot study, Dubnov and Greer found the system to be highly accurate in estimating the presenter’s gaze – managing to get within three-quarters of an inch (2 cm) of the correct point on a 27.5 x 15 inch (70 x 39 cm) screen. “In principle,” Greer told New Scientist magazine, “the system should work well on small screens, given enough quality data.”

One downside, according to Dubnov: the farther the presenter is from the camera, the smaller and harder to track the eyes become, resulting in less accurate gaze estimation. Yet with better training data, higher camera resolution, and further advances in tracking facial and body gestures, he thinks the system could even allow a conductor to wield a baton remotely and conduct a distributed symphony orchestra – even if every musician is located somewhere else.

* Ross Greer and Shlomo Dubnov, Eye-Tracking Software Could Make Video Calls Feel More Lifelike, Proceedings of CSME (2021), ISBN: 978-989-758-502-9, DOI: 10.5220/0010539806980708

Media Contacts

Doug Ramsey

dramsey@ucsd.edu
