Novel Multimodal Computer Vision Techniques Promise Improved Recognition and Tracking of Human Activity

San Diego, CA, January 10, 2007 -- Computer vision researchers at the University of California, San Diego have developed and demonstrated new techniques to improve recognition of human activity by using cameras that operate at wavelengths other than those used in human vision. The algorithms could be of use in applications such as surveillance, automotive safety, smart spaces and human-computer interfaces.


"The new systems we are developing are multi-perspective and multimodal," said Mohan Trivedi, professor of electrical and computer engineering in UCSD's Jacobs School of Engineering. "They allow observation of a space and its occupants from various viewpoints and sense reflected as well as emitted energies. The objective is to observe and understand human movements and activities in a robust manner, and the results have been very encouraging."

The multi-perspective approach involves two or more cameras observing the same person from different angles. Multimodal means using more than one type of camera, e.g., thermal infrared and color.
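Why redundancy across views and modalities helps can be illustrated with a simple fusion rule. The sketch below is an assumption for illustration, not the researchers' method: it supposes each camera reports a position estimate for a body part in a shared coordinate frame together with a variance, and it combines the estimates by inverse-variance weighting. A camera that loses the target (for example, a color camera at night) simply contributes nothing, so the fused estimate survives as long as one view or modality still sees the person. The function name `fuse_estimates` and the readings are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical per-camera estimate: (x, y, variance) in a shared coordinate frame.
Estimate = Tuple[float, float, float]


def fuse_estimates(
    estimates: Sequence[Optional[Estimate]],
) -> Optional[Tuple[float, float]]:
    """Inverse-variance weighted average of redundant position estimates.

    Cameras with no detection (None) are skipped; returns None only if
    every camera lost the target.
    """
    total_w = 0.0
    sx = sy = 0.0
    for est in estimates:
        if est is None:
            continue  # this view/modality did not detect the target
        x, y, var = est
        if var <= 0:
            raise ValueError("variance must be positive")
        w = 1.0 / var  # more certain estimates get more weight
        sx += w * x
        sy += w * y
        total_w += w
    if total_w == 0.0:
        return None
    return (sx / total_w, sy / total_w)


if __name__ == "__main__":
    # Hypothetical night-time readings: the color camera lost the head (None),
    # while two thermal views from different angles still report it.
    readings = [None, (10.0, 4.0, 1.0), (12.0, 4.0, 3.0)]
    print(fuse_estimates(readings))
```

Inverse-variance weighting is only one possible choice; a tracker would more likely feed such measurements into a filter over time.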

Recent results of Trivedi's research are recounted in two new papers co-authored with researchers in his Computer Vision and Robotics Research (CVRR) laboratory, an affiliate of Calit2 on the UCSD campus. They are published in the latest edition of the journal Computer Vision and Image Understanding, in a special issue devoted to "Vision Beyond Visible Spectrum".

"To have two full papers from the same lab in one special issue is indeed a very nice recognition for UCSD," said Trivedi. "The research involving multiple modalities of sensing that we initiated more than five years ago is yielding a lot of useful advances, including these two papers that deal with new algorithms and analysis using thermal infrared video along with color video."


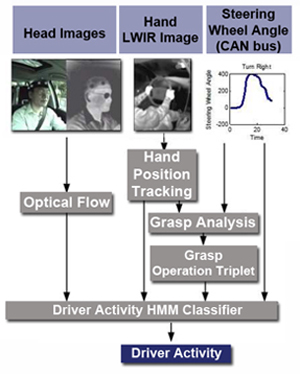

In "Multi-spectral and Multi-perspective Video Arrays for Driver Body Tracking and Activity Analysis" [1], authors Shinko Cheng, Sangho Park and Trivedi present a novel approach to recognizing what a motorist does while driving. "It is a major challenge to track and analyze a person's movements," explains Cheng. "This is especially true in unconstrained environments where the lighting is unreliable and where there is 'noise' in the environment because so much activity may be going on, in this case, inside and outside the vehicle."

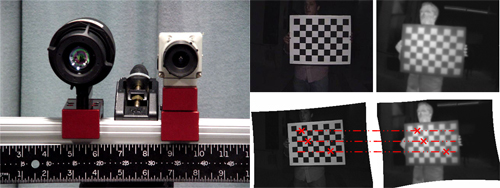

Cheng and his fellow researchers in the smart-car lab developed a system consisting of four separate cameras and views (multi-perspective), including both thermal infrared and color cameras (multimodal). The equipment was installed on the LISA-Q, an Infiniti Q45 bedecked with cameras, sensors and processors that has been used in a number of automotive computer-vision experiments to date. The video-based system was then tested on the road to see how well it achieved "robust and real-time" tracking of the driver, specifically of the driver's important body parts: head, arms, torso and legs.

"The multi-perspective characteristics of the system provide redundant trajectories of the body parts, while the multimodal characteristics of the system provide robustness and reliability of feature detection and tracking," report the authors. "The combination of a deterministic activity grammar (called 'operation triplet') and a hidden Markov model-based classifier provides semantic-level analysis of human activity."
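The classifier half of that combination can be sketched generically. The code below is not the authors' implementation and does not model the "operation triplet" grammar; it shows a standard hidden Markov model (HMM) classifier under stated assumptions: each activity has its own discrete HMM, the tracker's output has already been quantized into symbols, and a sequence is assigned to the activity whose model gives it the highest log-likelihood under the forward algorithm. The activity names, observation symbols and all probabilities are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def _log(p: float) -> float:
    return math.log(p) if p > 0 else -math.inf


def log_sum_exp(values: Sequence[float]) -> float:
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


class DiscreteHMM:
    """HMM with discrete emissions: start[s], trans[p][s], emit[s][symbol]."""

    def __init__(self, start: List[float], trans: List[List[float]], emit: List[List[float]]):
        self.start, self.trans, self.emit = start, trans, emit

    def log_likelihood(self, obs: Sequence[int]) -> float:
        """Forward algorithm in log space: log P(obs | model)."""
        if not obs:
            raise ValueError("observation sequence must be non-empty")
        n = len(self.start)
        alpha = [_log(self.start[s]) + _log(self.emit[s][obs[0]]) for s in range(n)]
        for o in obs[1:]:
            alpha = [
                log_sum_exp([alpha[p] + _log(self.trans[p][s]) for p in range(n)])
                + _log(self.emit[s][o])
                for s in range(n)
            ]
        return log_sum_exp(alpha)


def classify(models: Dict[str, DiscreteHMM], obs: Sequence[int]) -> str:
    """Return the activity whose HMM best explains the observations."""
    return max(models, key=lambda name: models[name].log_likelihood(obs))


def build_example_models() -> Dict[str, DiscreteHMM]:
    # Hypothetical symbols: 0 = hand on wheel, 1 = hand moving, 2 = hand near console.
    return {
        "steering": DiscreteHMM(
            start=[0.9, 0.1],
            trans=[[0.9, 0.1], [0.5, 0.5]],
            emit=[[0.8, 0.15, 0.05], [0.3, 0.6, 0.1]],
        ),
        "reaching": DiscreteHMM(
            start=[0.5, 0.5],
            trans=[[0.6, 0.4], [0.1, 0.9]],
            emit=[[0.3, 0.6, 0.1], [0.05, 0.25, 0.7]],
        ),
    }


if __name__ == "__main__":
    models = build_example_models()
    print(classify(models, [0, 0, 0, 0]))
    print(classify(models, [0, 1, 2, 2, 2]))
```

In a full system the models would be trained from labeled driving data (e.g., with Baum-Welch) rather than written by hand as here.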

The bottom line: experimental results in real-world street driving demonstrated the system's effectiveness. It tracked the driver's head and hands regardless of the level of illumination and delivered fairly accurate tracking performance in noisy outdoor driving situations.


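Ph.D. student Steve Krotosky, who co-authored the second paper, "Mutual Information Based Registration of Multimodal Stereo Videos for Person Tracking" [2], with Trivedi, explained its potential: "This can lead to robust and accurate pedestrian detection, tracking and analysis for active safety systems in a vehicle, and also for operating surveillance systems on a 24/7 basis."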


The research was mainly supported by the CVRR lab's grant from the Technical Support Working Group (TSWG), a federal, inter-agency institution responsible for overseeing technology development to help in the fight against terrorism. TSWG also supported the Eagle Eyes system developed by the UCSD researchers, which is used by the Police Department in Eagle Pass, TX. (See Related Links below for more on the Eagle Eyes project.)

[1] S.Y. Cheng, S. Park, M.M. Trivedi, "Multi-spectral and Multi-perspective Video Arrays for Driver Body Tracking and Activity Analysis," Computer Vision and Image Understanding (2006), doi: 10.1016/j.cviu.2006.08.010

[2] S.J. Krotosky, M.M. Trivedi, "Mutual Information Based Registration of Multimodal Stereo Videos for Person Tracking," Computer Vision and Image Understanding (2006), doi: 10.1016/j.cviu.2006.10.008

Related Links

Computer Vision and Robotics Research Laboratory

Eagle Eyes News Release

Laboratory for Intelligent and Safe Automobiles (LISA)

'Smart Cars, Safe Cars' Article

Creating a New 'Driving Ecology' for Enhanced Auto Safety Article

Media Contacts

Media Contact: Doug Ramsey, 858-822-5825, dramsey@ucsd.edu