UC San Diego Unveils World's Highest-Resolution Scientific Display System

Calit2 Also Releases New Version of CGLX Cluster-Based Visualization Framework

San Diego, CA, July 9, 2008 -- As the size of complex scientific data sets grows exponentially, so does the need for scientists to explore the data visually and collaboratively in ultra-high resolution environments. To that end, the California Institute for Telecommunications and Information Technology (Calit2) has unveiled the highest-resolution display system for scientific visualization in the world at the University of California, San Diego.


The Highly Interactive Parallelized Display Space (HIPerSpace) features nearly 287 million pixels of screen resolution – more than one active pixel for every U.S. citizen, based on the 2000 Census.

The HIPerSpace is more than 10 percent bigger (in terms of pixels) than the second-largest display in the world, constructed recently at the NASA Ames Research Center. That 256-million-pixel system, known as the hyperwall-2, was developed by the NASA Advanced Supercomputing Division at Ames, with support from Colfax International.

The expanded display at Calit2 is 30 percent bigger than the first HIPerSpace wall at UCSD, built in 2006. That system was moved to a larger location in Atkinson Hall, the Calit2 building at UCSD, where it was expanded by 66 million pixels to take advantage of the new space. The system was used officially for the first time on June 16 to demonstrate applications for a delegation from the National Geographic Society.


Calit2’s expanded HIPerSpace is an ultra-scale visualization environment developed on a multi-tile paradigm. The system features 70 high-resolution Dell 30” displays, arranged in fourteen columns of five displays each. Each “tile” has a resolution of 2,560 by 1,600 pixels, bringing the combined, visible resolution to 35,840 by 8,000 pixels, or more than 286.7 million pixels in all. “By using larger, high-resolution tiles, we also have minimized the amount of space taken up by the frames, or bezels, of each display,” said Kuester. “Bezels will eventually disappear, but until then, we can reduce their distraction by keeping the highest possible ratio of screen area to each tile’s bezel.” Including the pixels hidden behind the bezels of each display, which give the wall its “French door” appearance, the effective total image size is 348 million pixels.
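The visible-resolution figures follow directly from the tile grid. As a quick check, the short Python sketch below multiplies out the 14-by-5 arrangement of 2,560-by-1,600 tiles; the function and variable names are illustrative only and are not part of any HIPerSpace or CGLX software.

```python
# Illustrative arithmetic only; names here are hypothetical.
COLUMNS = 14                # displays per row of the wall
ROWS = 5                    # displays per column of the wall
TILE_W, TILE_H = 2560, 1600  # resolution of one 30-inch tile


def wall_resolution(columns, rows, tile_w, tile_h):
    """Return (width, height, total_pixels) of the visible tiled surface."""
    width = columns * tile_w
    height = rows * tile_h
    return width, height, width * height


width, height, pixels = wall_resolution(COLUMNS, ROWS, TILE_W, TILE_H)
print(f"{COLUMNS * ROWS} tiles: {width:,} x {height:,} = {pixels:,} pixels")
```

The 70 tiles give 35,840 by 8,000 pixels, or 286,720,000 visible pixels. This count excludes the image area hidden behind the bezels.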


“The HIPerSpace is the largest OptIPortal in the world,” said Calit2 Director Larry Smarr, a pioneer of supercomputing applications and principal investigator on the OptIPuter project. “The wall is connected by high-performance optical networking to the remote OptIPortals worldwide, as well as all of the compute and storage resources in the OptIPuter infrastructure, creating the basis for an OptIPlanet Collaboratory.”


In addition to 10 Gbps connectivity to resources at nine locations on the UCSD campus, including Calit2 and the San Diego Supercomputer Center (SDSC), the OptIPuter provides the HIPerSpace system with up to 2 Gbps in dedicated fiber connectivity with its precursor, the roughly 205-million-pixel HIPerWall at Calit2 on the UC Irvine campus. As a result, scientists can gather simultaneously in front of the walls in San Diego and Irvine and explore, analyze and collaborate in unison while viewing real-time, rendered graphics of large data sets, video streams and telepresence videoconferencing across nearly half a billion pixels.

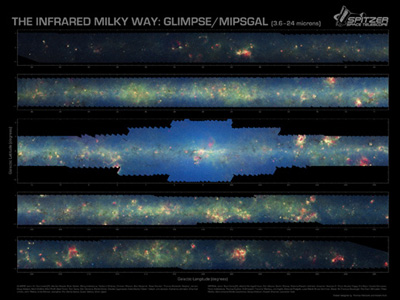

HIPerSpace is serving as a visual analytics research space with applications in Earth systems science, chemistry, astrophysics, medicine, forensics, art and archaeology, while enabling fundamental work in computer graphics, visualization, networking, data compression, streaming and human-computer interaction.

In particular, HIPerSpace is a research testbed for the visualization frameworks needed by the massive-resolution “digital wallpaper” displays of the near future, which will use bezel-free tiles to provide uninterrupted visual content.

Release of CGLX Version 1.2.1

The most notable of these frameworks is the Cross-Platform Cluster Graphics Library (CGLX), which introduces a new approach to high-performance, hardware-accelerated visualization on ultra-high-resolution display systems. It provides a cluster management framework, a development API, and a selected set of cluster-ready applications. Coinciding with the launch of the expanded HIPerSpace system, Calit2 today announced the official release of CGLX version 1.2.1, available for downloading at http://vis.ucsd.edu/~cglx.

“There is no reason why you need to start from scratch every time you want to program an application for a visualization cluster,” said CGLX developer Kai-Uwe Doerr, project scientist in Kuester's lab. “CGLX was developed to enable everybody to write real-time graphics applications for visualization clusters. The framework takes care of networking, event handling, access to hardware-accelerated rendering, and some other things. Users can focus on writing their applications as if they were writing them for a single desktop.”

CGLX features include:

- Cross-platform, hardware-accelerated rendering (UNIX and Mac OS X support);

- Synchronized, multilayer OpenGL context support;

- Distributed event management; and

- Scalable multi-display support.

Applications using CGLX include a real-time viewer for gigapixel images and image collections, video playback, video streaming, and visualization of multi-dimensional models. The CGLX framework is already used by nearly all 90 megapixel-plus OptIPortals worldwide, and it is available for Linux (Fedora, Red Hat, SUSE), Rocks Cluster Systems (bundled in the hiperroll), and Mac OS X (Leopard, and Tiger for PPC and Intel). CGLX is so flexible that it can even be scaled down to run on a commodity laptop. “With CGLX,” explained Falko Kuester, “researchers can finally focus on solving demanding visualization and data analysis challenges on next-generation visual analytics cyberinfrastructure.”


“These ultra-scale visualization techniques load data adaptively and progressively from network-attached storage, requiring only a small local memory footprint on each display node, while avoiding data replication,” explained graduate student Ponto. “All data is effectively loaded on demand in accordance with the locally available display resources.” Added fellow Computer Science and Engineering Ph.D. student Yamaoka: “A render node driving a single four-megapixel display, for example, will only fetch the data needed to fill that display at any given point in time. If the viewing position is updated, the needed data is again fetched, on demand.”
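The on-demand behavior Yamaoka describes can be illustrated with a small Python sketch. It is not the CGLX API or the researchers' implementation. It assumes, purely for illustration, that a large image is stored as fixed-size square blocks and that each render node knows its display's column and row in the wall. The function name, the `scale` zoom parameter and the 512-pixel block size are all hypothetical.

```python
from math import ceil, floor


def visible_blocks(view_x, view_y, scale, col, row,
                   tile_w=2560, tile_h=1600, block=512):
    """Return the set of (bx, by) image-block indices one display needs.

    view_x, view_y: image coordinates shown at the wall's top-left corner
    scale: image pixels per screen pixel (hypothetical zoom parameter)
    col, row: position of this node's display in the wall grid
    block: edge length in pixels of a stored image block (assumed)
    """
    # Region of the image covered by this single display.
    left = view_x + col * tile_w * scale
    top = view_y + row * tile_h * scale
    right = left + tile_w * scale
    bottom = top + tile_h * scale

    # Blocks that overlap that region; everything else is never loaded.
    bx0, by0 = floor(left / block), floor(top / block)
    bx1, by1 = ceil(right / block), ceil(bottom / block)
    return {(bx, by) for bx in range(bx0, bx1) for by in range(by0, by1)}


# When the view pans, only blocks not already held need to be fetched.
before = visible_blocks(0, 0, 1.0, col=0, row=0)
after = visible_blocks(1024, 0, 1.0, col=0, row=0)
to_fetch = after - before
print(len(before), "blocks held,", len(to_fetch), "new blocks to fetch")
```

Under these assumptions, each node's working set depends only on its own display area and the current view, not on the size of the full data set, which is the property the quotes above emphasize.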

Related Links

GRAVITY Lab at UC San Diego

HIPerSpace

CGLX

NASA Spitzer Space Telescope Survey

NASA Blue Marble

Intel

nVIDIA

Dell

Media Contacts

Doug Ramsey, 858-822-5825, dramsey@ucsd.edu